Datasets

Managing multiple datasets within a project

A dataset is a collection of JSON documents that can be of different types and have references to each other. You can think of a dataset as a “database” where all of your content is stored, whereas the document types would constitute “tables”. Using GROQ or GraphQL, you can query and join data across documents within a dataset, but not across datasets. Typical applications of datasets are:

- operating separate environments for testing, staging, and production

- localizing and segmenting content across all content types

- keeping content that serves different purposes under the same user access and billing

When you query over the HTTP API, the dataset name is part of the URL path:

https://<projectId>.api.sanity.io/v2021-06-07/data/query/<dataset>?query=*

You can also specify which dataset to use with the client libraries (configured when initializing a client) and Sanity Studio (configured in sanity.config.ts or using environment variables).
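
As a rough illustration of how the dataset name fits into the query URL shown earlier, the following minimal sketch assembles that URL from its parts. The helper name buildQueryUrl and the project ID abc123 are hypothetical and used only for illustration. The API version is the one that appears in the URL on this page.

```typescript
// API version taken from the example URL on this page.
const API_VERSION = "v2021-06-07";

// Hypothetical helper (not part of any Sanity library): builds the HTTP
// query URL for one dataset in one project.
function buildQueryUrl(projectId: string, dataset: string, query: string): string {
  const base = `https://${projectId}.api.sanity.io/${API_VERSION}/data/query/${encodeURIComponent(dataset)}`;
  // The GROQ query is passed in the "query" parameter and must be URL-encoded.
  return `${base}?query=${encodeURIComponent(query)}`;
}

// "abc123" is a placeholder project ID.
const productionUrl = buildQueryUrl("abc123", "production", "*");
console.log(productionUrl);
// prints "https://abc123.api.sanity.io/v2021-06-07/data/query/production?query=*"
```

Switching environments then only requires passing a different dataset name, for example "staging" instead of "production".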

Dataset management

Datasets can be created and managed using the sanity command-line tool, e.g. by running sanity dataset create <name> or sanity dataset list. To see all dataset-related subcommands, run sanity dataset.

Datasets can also be created and deleted in the project's management console, under the "Datasets" tab.

A dataset name must be between 1 and 64 characters long. It may only contain lowercase characters (a-z), numbers (0-9), hyphens (-), and underscores (_), and must begin and end with a lowercase letter or number.
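
The naming rules above can be checked before you attempt to create a dataset. The following is a minimal sketch of such a check, based only on the rules stated on this page. The function name isValidDatasetName is hypothetical, and the service may apply additional constraints not covered here.

```typescript
// First and last character: lowercase letter or digit.
// Middle characters (0 to 62 of them): lowercase letters, digits, "_" or "-".
// Together this allows names that are 1 to 64 characters long.
const DATASET_NAME_PATTERN = /^[a-z0-9](?:[a-z0-9_-]{0,62}[a-z0-9])?$/;

// Hypothetical helper: returns true if the name satisfies the stated rules.
function isValidDatasetName(name: string): boolean {
  return DATASET_NAME_PATTERN.test(name);
}

console.log(isValidDatasetName("production"));  // prints true
console.log(isValidDatasetName("Production"));  // prints false (uppercase letter)
console.log(isValidDatasetName("-staging"));    // prints false (starts with a hyphen)
```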

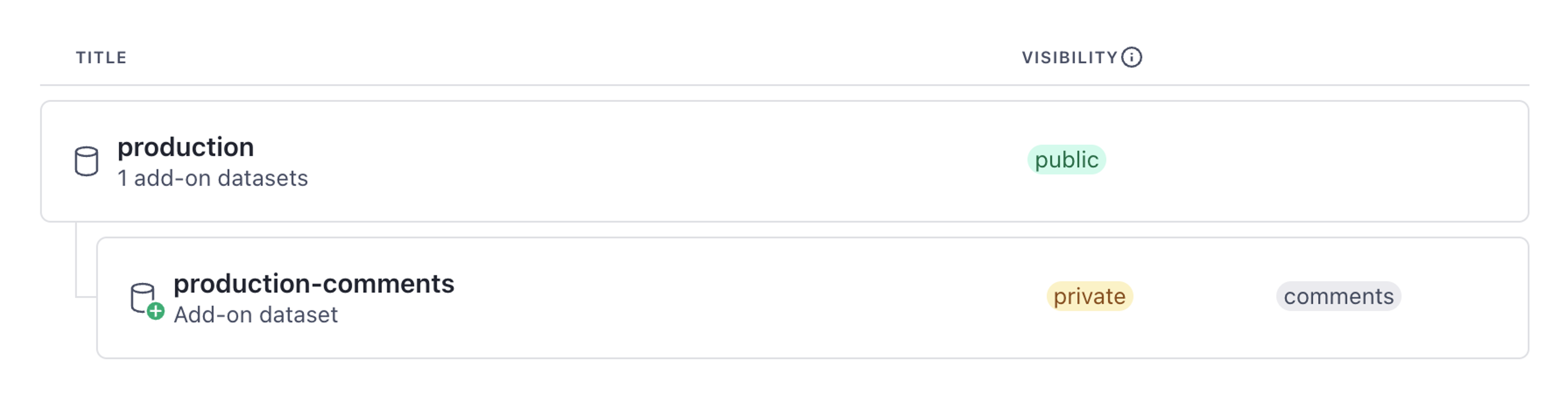

Add-on datasets

Some features automatically create "add-on" datasets and pair them to your dataset. These are complimentary and don't count toward your plan's dataset limit.

You can manage these as you would any other dataset. Learn more about configuring comments and the comments dataset.

Dataset migration

You can export content from and import content into datasets, as well as perform mutations and patches on the documents in them.
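
To give a sense of what a patch against a specific dataset might look like over HTTP, here is a minimal sketch that only builds the request and does not send it. It assumes the mutation endpoint follows the same URL pattern as the query URL on this page, with data/mutate in place of data/query, and that a token with write access is sent as a bearer token. The helper name buildPatchRequest and all IDs and values are hypothetical. Check the HTTP API reference before relying on the details.

```typescript
// A single patch mutation that sets fields on one document.
interface PatchMutation {
  patch: { id: string; set: Record<string, unknown> };
}

// The pieces needed to send the request, e.g. with fetch(url, init).
interface MutationRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds (but does not send) a patch request
// targeting one document in one dataset.
function buildPatchRequest(
  projectId: string,
  dataset: string,
  token: string,
  documentId: string,
  fields: Record<string, unknown>
): MutationRequest {
  const mutations: PatchMutation[] = [{ patch: { id: documentId, set: fields } }];
  return {
    // Assumption: same API version and URL pattern as the query endpoint.
    url: `https://${projectId}.api.sanity.io/v2021-06-07/data/mutate/${encodeURIComponent(dataset)}`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${token}`,
      },
      body: JSON.stringify({ mutations }),
    },
  };
}

// Placeholder values throughout; sending it would be: fetch(request.url, request.init)
const request = buildPatchRequest("abc123", "staging", "<token>", "post-1", { title: "Hello" });
console.log(request.url);
```

Because the dataset is part of the URL, the same patch can be tried against a staging dataset first and then applied to production by changing a single argument.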

Advanced Dataset Management

This is a paid feature

This feature is available on certain Enterprise plans. Talk to sales to learn more.

You can initiate dataset copying directly in the cloud and create aliases to hot swap between datasets without changing the underlying code for your project.

- Full documentation for cloning datasets in the cloud

- Full documentation for hot swapping your datasets without changing your code
