This started as an internal Sanity workshop where I demoed how I actually use AI. Spoiler: it's running multiple agents like a small team with daily amnesia.

Vincent Quigley

Vincent Quigley is a Staff Software Engineer at Sanity

Until 18 months ago, I wrote every line of code myself. Today, AI writes 80% of my initial implementations while I focus on architecture, review, and steering multiple development threads simultaneously.

This isn't another "AI will change everything" post. This is about the messy reality of integrating AI into production development workflows: what actually works, what wastes your time, and why treating AI like a "junior developer who doesn't learn" became my mental model for success.

The backstory: We run monthly engineering workshops at Sanity where someone presents what they've been experimenting with. Last time was my turn, and I showed how I'd been using Claude Code.

This blog post is from my presentation at our internal workshop (10-min recording below).

My approach to solving code problems has pivoted four times in my career:

For the first 5 years, I was reading books and SDK documentation.

Then 12 years of googling for crowd-sourced answers.

Then 18 months of using Cursor for AI-assisted coding.

And recently, 6 weeks of using Claude Code for full AI delegation.

Each transition happened faster than the last. The shift to Claude Code? That took just hours of use for me to become productive.

Here's what my workflow looks like now, stripped of the hype. I use AI mostly "to think with" as I'm working with it towards the code that ends up in production.

Forget the promise of one-shot perfect code generation. Your job as an engineer is to find the best solution for the problem, not just write a bunch of code.

Then you take the learnings from this attempt and feed them back.

This isn't failure; it's the process! Expecting perfection on attempt one is like expecting a junior developer to nail a complex feature without context.
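The attempt-and-feed-back cycle can be sketched as a small loop. This is only an illustration of the idea, not the workflow of any particular tool: `generate` and `review` are hypothetical stand-ins (in practice, `generate` is whatever AI tool you use and `review` is you reading the output), and the convention that an empty review string means "accepted" is an assumption made for the sketch.

```python
from typing import Callable, List, Tuple


def run_attempts(
    task: str,
    generate: Callable[[str], str],  # hypothetical: sends a prompt, returns output
    review: Callable[[str], str],    # hypothetical: returns feedback, "" if accepted
    max_attempts: int = 3,
) -> Tuple[str, int]:
    """Retry a task, feeding review notes from earlier attempts into the next prompt.

    Returns the last output and the number of attempts used.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    notes: List[str] = []
    prompt = task
    output = ""
    for attempt in range(1, max_attempts + 1):
        output = generate(prompt)
        feedback = review(output)
        if not feedback:
            # Assumption for this sketch: empty feedback means the output is accepted.
            return output, attempt
        notes.append(feedback)
        learnings = "\n".join(f"- {note}" for note in notes)
        prompt = f"{task}\n\nLearnings from earlier attempts:\n{learnings}"
    # Never accepted: hand back the last output so a human can take over.
    return output, max_attempts
```

The point of the sketch is that each prompt carries forward what the previous attempt got wrong, so later attempts start from more context than the first one did.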

The biggest challenge? AI can't retain learning between sessions (unless you spend the time manually giving it the "memories"). So typically, every conversation starts fresh.

My solutions:

Create a project-specific context file with:

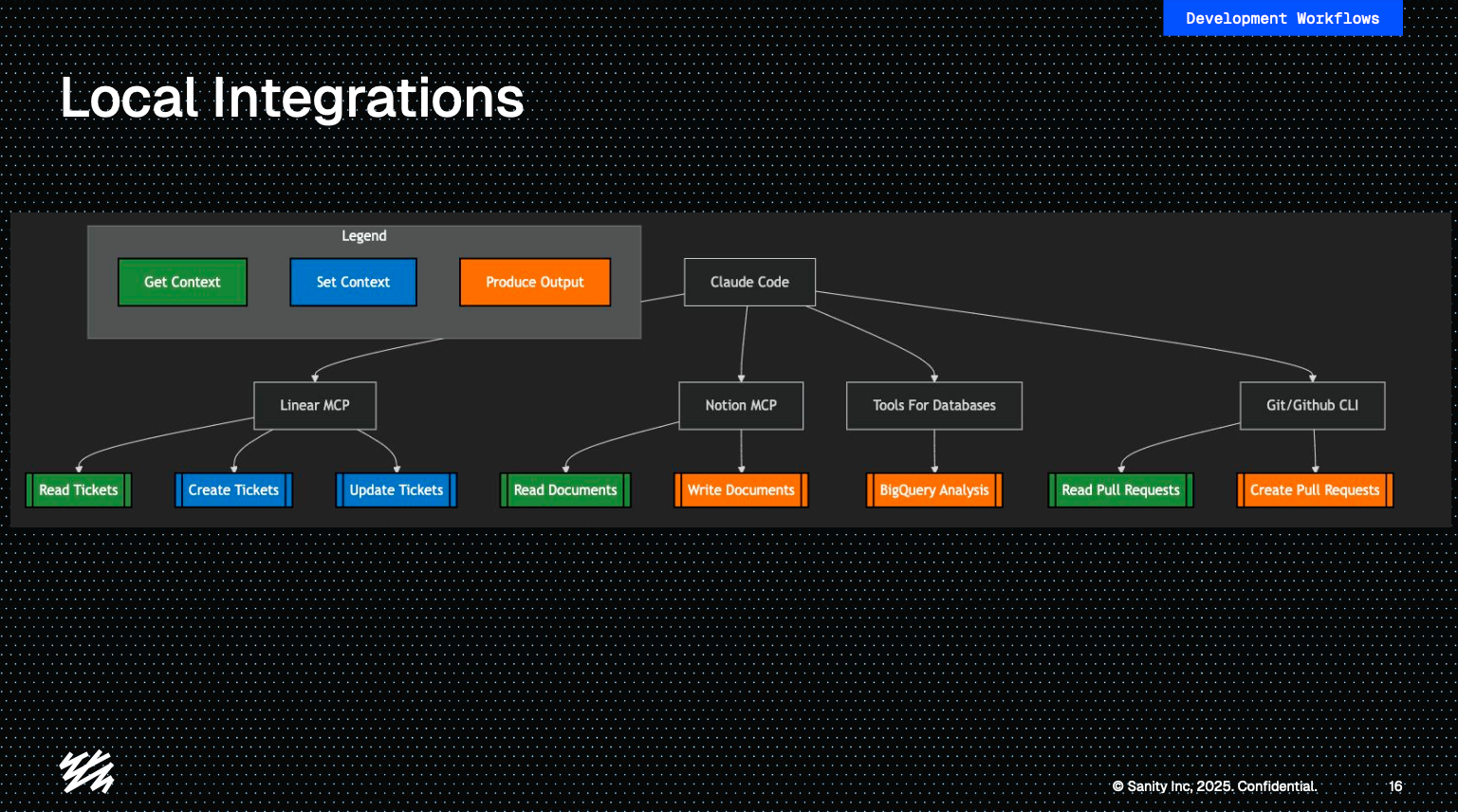

Thanks to MCP integrations, I can now connect my AI to:

Without this context, you're explaining the same constraints repeatedly. With it, you start at attempt two instead of attempt one.
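As a minimal sketch of the idea, assuming the context lives in a plain text or markdown file: the helper below prepends that file to a task description so a fresh session starts with the project's constraints. The function name, the headings, and the file name in the usage note are hypothetical, and your AI tool may already load such a file on its own.

```python
from pathlib import Path


def build_session_prompt(task: str, context_path: Path) -> str:
    """Prepend saved project context (if any) to a task description."""
    context = ""
    if context_path.exists():
        context = context_path.read_text(encoding="utf-8").strip()
    if not context:
        # No saved context yet: the session starts from the bare task.
        return task
    return f"# Project context\n{context}\n\n# Task\n{task}"
```

For example, `build_session_prompt("Add a slug field", Path("project-context.md"))` returns the task alone when the (hypothetical) file is missing, and the context followed by the task once it exists.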

I run multiple Claude instances in parallel now. It's like managing a small team of developers who reset their memory each morning.

Key strategies:

Writing code is one part of the job, but so is reviewing code. Adopting AI has evolved my code review process as well.

This saves me and my peers time and extra rounds of review.

At Sanity, our policy is that the engineer is responsible for the code they ship, even if it's AI-generated. I want to make sure that I ship:

The key takeaway: I'm more critical of "my code" now because I didn't type out a lot of it. No emotional attachment means better reviews.

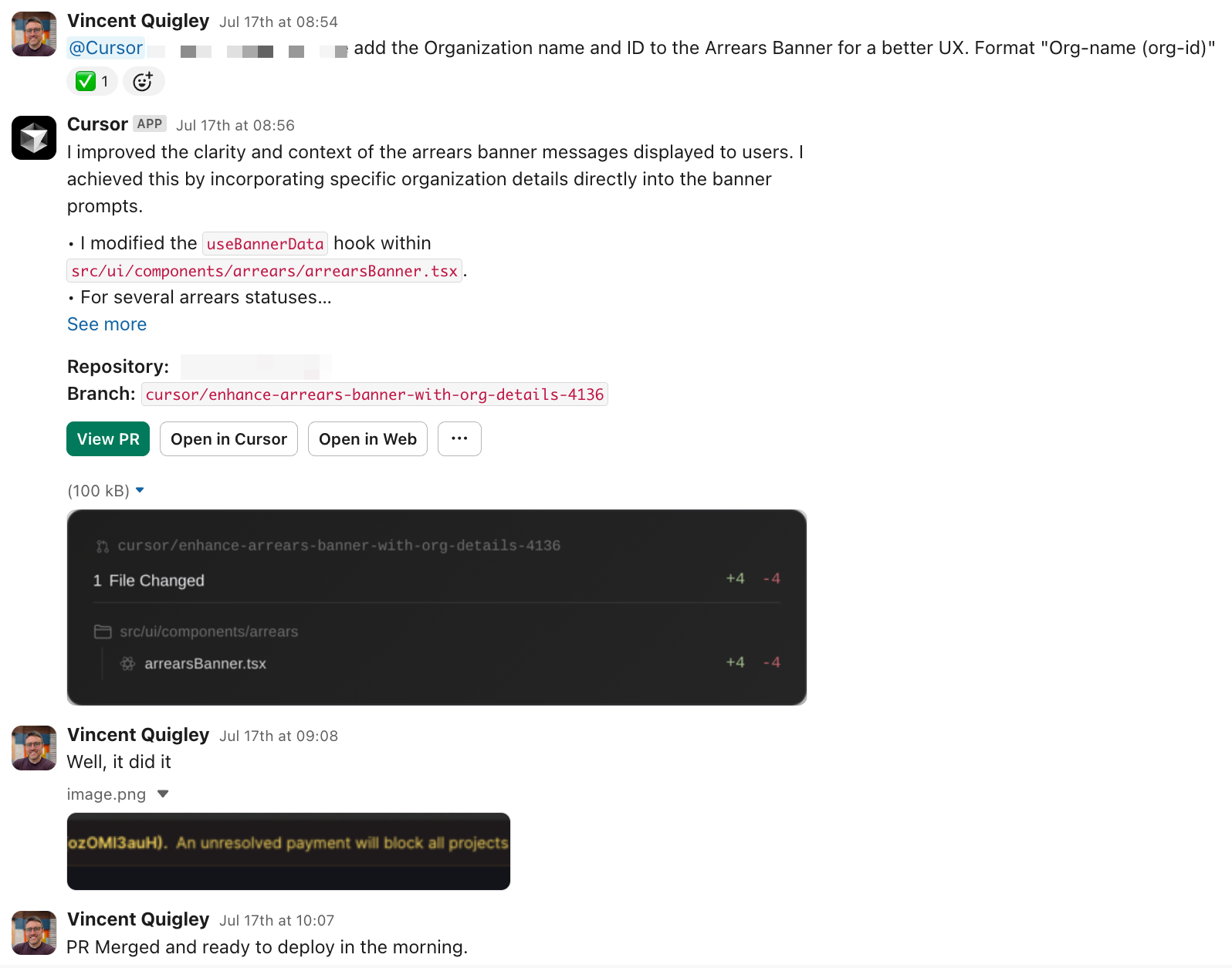

We're testing Slack-triggered agents using Cursor for simple tasks:

Current limitations:

But the potential? Imagine agents handling your backlog's small tickets while you sleep. We're actively exploring this at Sanity, sharing learnings across teams as we figure out what works.

Let's talk money. My Claude Code usage costs my company a not-insignificant percentage of what they pay me each month.

But for that investment:

The ROI is obvious, but budget $1,000-1,500/month for a senior engineer going all-in on AI development. It's also reasonable to expect engineers to get more efficient with AI spend as they get better at it, but give them time.
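To put that monthly range in annual terms, here is a quick back-of-the-envelope calculation. The only inputs taken from this post are the $1,000 and $1,500 monthly figures; the team size of five is a hypothetical example.

```python
from typing import Tuple

MONTHLY_LOW_USD = 1000   # low end of the monthly range quoted above
MONTHLY_HIGH_USD = 1500  # high end of the monthly range quoted above


def annual_range(low_monthly: int, high_monthly: int, engineers: int = 1) -> Tuple[int, int]:
    """Scale a monthly per-engineer budget range to a yearly team budget range."""
    return (low_monthly * 12 * engineers, high_monthly * 12 * engineers)


print(annual_range(MONTHLY_LOW_USD, MONTHLY_HIGH_USD))     # (12000, 18000)
print(annual_range(MONTHLY_LOW_USD, MONTHLY_HIGH_USD, 5))  # (60000, 90000), hypothetical team of 5
```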

Not everything in AI-assisted development is smooth. Here are the persistent challenges I keep running into:

The learning problem

AI doesn't learn from mistakes. You fix the same misunderstandings repeatedly. The solution: better documentation and more explicit instructions.

The confidence problem

AI confidently writes broken code while claiming that it's great. Always verify, especially for:

The context limit problem

Large codebases overwhelm AI context windows. Break problems into smaller chunks and provide focused context.
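One way to picture "smaller chunks" is to group files so each group stays under a rough size budget. The sketch below is an assumption-heavy illustration: the four-characters-per-token estimate is a crude heuristic rather than a real tokenizer, and the function names, file names, and budget are all hypothetical.

```python
from typing import Dict, List


def estimate_tokens(text: str, chars_per_token: int = 4) -> int:
    """Very rough token estimate (assumed heuristic); real tokenizers vary."""
    return max(1, len(text) // chars_per_token)


def group_files(files: Dict[str, str], budget: int) -> List[List[str]]:
    """Greedily pack files, in order, into groups whose estimated size fits the budget.

    A single file larger than the budget still gets a group of its own.
    """
    groups: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, text in files.items():
        cost = estimate_tokens(text)
        if current and used + cost > budget:
            # Adding this file would overflow the budget: close the current group.
            groups.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        groups.append(current)
    return groups
```

Each resulting group can then go to its own focused session instead of pushing the whole codebase into one conversation.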

The hardest part? Letting go of code ownership. But now I don't care about "my code" anymore; it's just output to review and refine.

This detachment is actually quite liberating!

If a better AI tool appears tomorrow, I'll switch immediately. The code isn't precious; the problems we solve are.

Here's my advice, from an engineer's perspective, if you're a technical leader considering AI adoption:

The engineers who adapt to the new AI workflows will find themselves with a sharp new knife in their toolbox: they're becoming orchestrators, handling multiple AI agents while focusing on architecture, review, and complex problem-solving.

Pick one small, well-defined feature. Give AI three attempts at implementing it. Review the output like you're mentoring a junior developer.

That's it. No huge transformation needed, no process overhaul required. Just one feature, three attempts, and an honest review.

The future isn't about AI replacing developers. It's about developers working faster, creating better solutions, and leveraging the best tools available.

👋 Knut from the developer education team here. If you're curious why Sanity makes AI-assisted development particularly effective, it's because everything is code-based configuration. Schemas, workflows, and even the editorial UI are defined in TypeScript, which means AI tools can actually understand and generate the entire stack. No clicking through web UIs to configure things. Here's a course on that specific workflow if you want to go deeper.

And back to our regular programming.
