Text embeddings definition

Text embeddings convert words, sentences, or documents into numeric vectors that capture meaning, enabling semantic search, recommendations, classification, and retrieval in AI applications.

What are text embeddings?

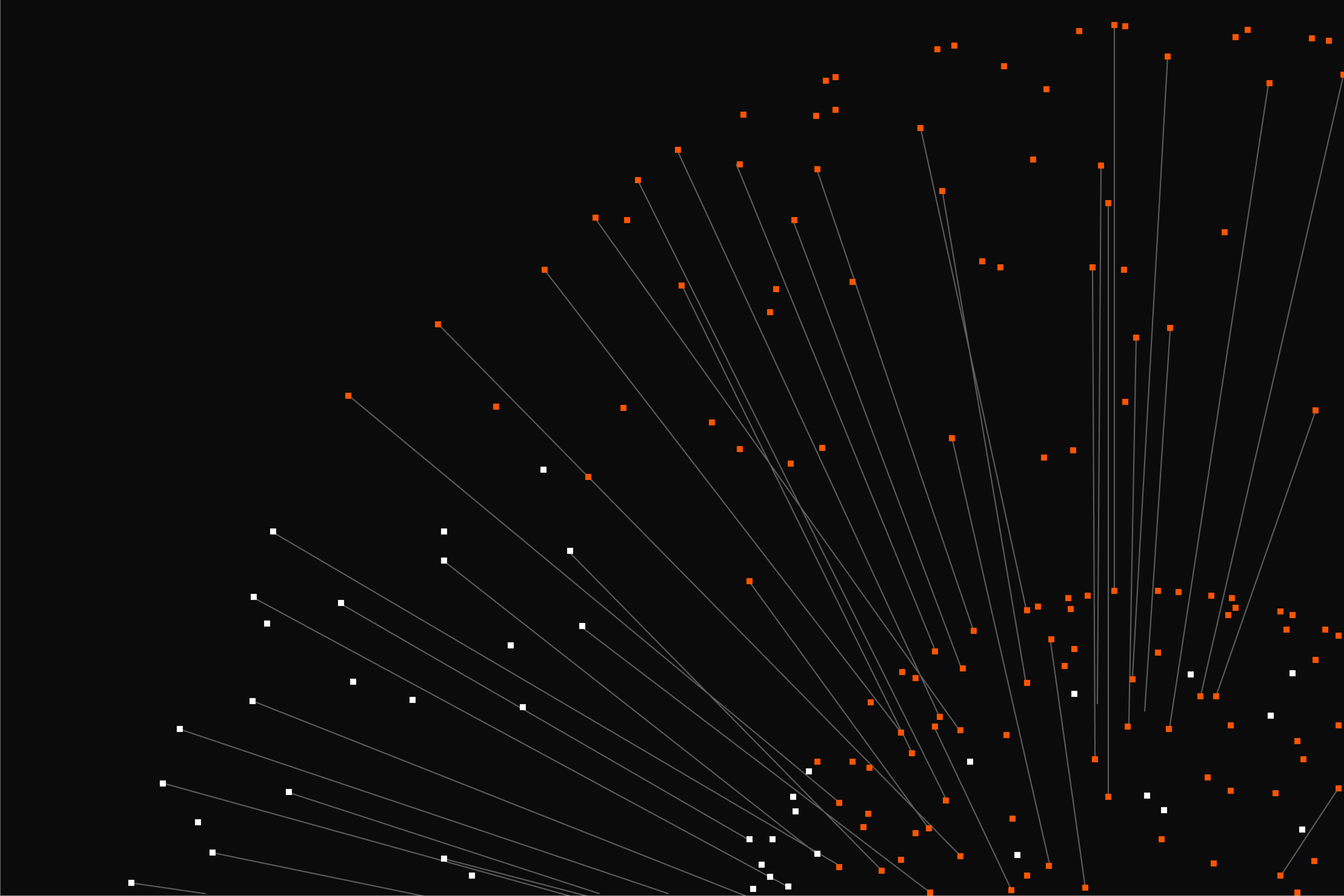

Text embeddings are numerical representations of language. They turn words, sentences, or whole documents into vectors: lists of numbers that act as coordinates in a high‑dimensional space. In that space, items with similar meaning sit closer together, so a system can tell that “doctor” and “physician” are related even though the words themselves differ.
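To make “closer together” concrete, here is a toy sketch. The three 3-dimensional vectors are hand-picked for illustration (real models produce hundreds or thousands of dimensions), and plain Euclidean distance stands in for whatever measure a given system uses.

```python
import math

# Hypothetical, hand-picked 3-dimensional vectors for illustration only.
# Real embedding models output hundreds or thousands of dimensions.
toy_embeddings = {
    "doctor":    [0.90, 0.80, 0.10],
    "physician": [0.88, 0.82, 0.12],
    "banana":    [0.10, 0.05, 0.95],
}

def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

d_related = euclidean_distance(toy_embeddings["doctor"], toy_embeddings["physician"])
d_unrelated = euclidean_distance(toy_embeddings["doctor"], toy_embeddings["banana"])
print(f"doctor-physician: {d_related:.3f}")
print(f"doctor-banana:    {d_unrelated:.3f}")
```

The related pair ends up much closer than the unrelated pair, which is the property semantic search relies on.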

Modern AI models (like transformers) learn these vectors from large amounts of text, capturing context and nuance. This lets software perform semantic search, detect near‑duplicates, group similar content, and improve recommendations or classification. Compared with simple keyword counts, dense embeddings capture meaning rather than just word matches, so results stay relevant even when the wording changes.
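The weakness of keyword counts is easy to demonstrate. This minimal sketch scores documents by how many query words they contain (a simple bag-of-words overlap, not any particular search engine's scoring). The query and documents are made up.

```python
from collections import Counter

def keyword_overlap(query, document):
    """Count how many query words also appear in the document (simple keyword matching)."""
    q = Counter(query.lower().split())
    d = Counter(document.lower().split())
    # Counter returns 0 for missing words, so unmatched words add nothing.
    return sum(min(q[w], d[w]) for w in q)

query = "find a doctor"
relevant = "physician appointments available today"
irrelevant = "a doctor of philosophy degree"

print(keyword_overlap(query, relevant))
print(keyword_overlap(query, irrelevant))
```

Keyword matching gives the relevant document a score of zero and prefers the off-topic one, because it only sees shared strings. An embedding model can instead place “doctor” and “physician” near each other.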

How text embeddings work in simple terms

Think of an embedding as a compact numeric “fingerprint” of meaning. During training, a model reads huge amounts of text and learns that words and phrases used in similar contexts should have fingerprints that sit near each other. Over time, it becomes good at placing “doctor” close to “physician,” and “bank (river)” apart from “bank (finance)” by looking at the surrounding words and sentences.
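The idea that words used in similar contexts get similar fingerprints can be shown with a much older, simpler technique than transformers: count-based co-occurrence vectors. This sketch is not how modern models are trained. It only illustrates the distributional intuition on a tiny, made-up corpus with stop words already removed.

```python
import math
from collections import Counter, defaultdict

# Tiny hypothetical corpus (stop words removed to keep it small).
corpus = [
    "doctor treats patient in hospital",
    "physician treats patient in clinic",
    "chef cooks meal in kitchen",
]

WINDOW = 2  # count neighbors up to 2 positions away on each side

def cooccurrence_vectors(sentences, window):
    """Map each word to a Counter of the words that appear within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

vectors = cooccurrence_vectors(corpus, WINDOW)
print("doctor/physician:", round(cosine(vectors["doctor"], vectors["physician"]), 3))
print("doctor/chef:", round(cosine(vectors["doctor"], vectors["chef"]), 3))
```

“doctor” and “physician” share the neighbors “treats” and “patient”, so their vectors point the same way, while “chef” shares none. Neural models learn dense vectors with the same tendency, but from far more text and with context-dependent representations.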

At query time, the trained model (the encoder) turns your query or document into a vector. The system then compares this vector to others using a similarity score (often cosine similarity) and returns the nearest neighbors. This powers semantic search, clustering, and recommendations without relying on exact keyword matches.
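The comparison step can be sketched in a few lines. This assumes the vectors were already produced by some encoder; the 4-dimensional values below are made up, and a brute-force scan stands in for the approximate nearest-neighbor indexes that production systems typically use.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbors(query_vec, index, k=2):
    """Return the k (id, score) pairs from `index` most similar to `query_vec`, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical pre-computed document vectors (made-up values, 4 dimensions).
index = {
    "Find a physician near you":    [0.9, 0.1, 0.0, 0.2],
    "Booking a doctor appointment": [0.8, 0.2, 0.1, 0.3],
    "Banana bread recipe":          [0.0, 0.9, 0.8, 0.1],
}

# Hypothetical vector for the query "see a doctor".
query = [0.85, 0.15, 0.05, 0.25]

results = nearest_neighbors(query, index, k=2)
for doc_id, score in results:
    print(f"{score:.3f}  {doc_id}")
```

Cosine similarity ignores vector length and compares direction only, which is why it is a common default for text embeddings.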

Where they’re used: search, recommendations, and question answering

In search, embeddings turn your query into a vector and retrieve meaningfully similar passages—great for handling synonyms and phrasing differences across documents, emails, or tickets. In content systems like Sanity, embeddings support semantic reference search and smarter auto‑tagging, so editors can link related content and keep taxonomies consistent without chasing exact keywords.
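One common way to use embeddings for auto-tagging is to embed each tag and suggest the tags closest to a document. The sketch below shows that general pattern under stated assumptions: the tag names, vectors, and the 0.8 threshold are all hypothetical, and this is not Sanity's implementation or API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def suggest_tags(doc_vec, tag_vecs, threshold=0.8):
    """Suggest every tag at least `threshold` similar to the document, best first."""
    scored = [(tag, cosine_similarity(doc_vec, vec)) for tag, vec in tag_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [tag for tag, score in scored if score >= threshold]

# Hypothetical tag vectors (made-up, 3 dimensions).
tag_vecs = {
    "healthcare": [0.9, 0.1, 0.1],
    "cooking":    [0.1, 0.9, 0.2],
    "finance":    [0.1, 0.2, 0.9],
}

# Hypothetical vector for an article about choosing a family physician.
article_vec = [0.85, 0.2, 0.15]

suggestions = suggest_tags(article_vec, tag_vecs)
print(suggestions)
```

The threshold trades recall for precision: lowering it suggests more tags, raising it keeps only confident matches for an editor to review.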

For recommendations, vectors help surface similar articles, products, or videos and catch near‑duplicates to reduce clutter. In question answering, they power Retrieval‑Augmented Generation (RAG): the system finds the closest supporting passages and feeds them to a model to craft a grounded answer. Many pipelines add reranking to pick the most relevant snippet before responding, improving help centers and chat assistants.
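The retrieval half of RAG can be sketched as ranking passages by similarity, skipping near-duplicates, and packing the survivors into a prompt. Everything here is an assumption for illustration: the passages and vectors are made up, the 0.98 duplicate threshold is arbitrary, there is no reranking model, and the call to a language model is left out.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_vec, passages, k=2, dup_threshold=0.98):
    """Pick up to k passages most similar to the query, skipping near-duplicates of ones already picked."""
    ranked = sorted(passages, key=lambda p: cosine_similarity(query_vec, p["vec"]), reverse=True)
    picked = []
    for p in ranked:
        if all(cosine_similarity(p["vec"], chosen["vec"]) < dup_threshold for chosen in picked):
            picked.append(p)
        if len(picked) == k:
            break
    return picked

def build_prompt(question, picked):
    """Ground the answer in the retrieved passages (the model call itself is out of scope)."""
    context = "\n".join(f"- {p['text']}" for p in picked)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical passages with made-up 3-dimensional vectors.
passages = [
    {"text": "Reset your password from the account settings page.", "vec": [0.9, 0.1, 0.0]},
    {"text": "You can reset a password under account settings.",    "vec": [0.89, 0.11, 0.01]},
    {"text": "Passwords must be at least 12 characters long.",       "vec": [0.6, 0.5, 0.1]},
    {"text": "Our office is closed on public holidays.",             "vec": [0.0, 0.1, 0.9]},
]

query_vec = [0.85, 0.2, 0.05]  # hypothetical vector for "How do I reset my password?"
picked = retrieve(query_vec, passages, k=2)
prompt = build_prompt("How do I reset my password?", picked)
print(prompt)
```

Dropping the near-duplicate leaves room in the context for a second, genuinely different passage instead of the same answer phrased twice.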

Explore Sanity Today

With text embeddings under your belt, it's time to see what Sanity can do for you. Explore our features and tools to take your content to the next level.