
“AI-ready content” means at least three different things. This field guide helps you tell them apart, then shows you what to actually build.

Knut Melvær

Principal Developer Marketing Manager

Updated

"AI-ready content." Everyone agrees you need it. Nobody agrees on what it means. AEO strategies (or GEO, or 'SEO for AI'), llms.txt debates, Cloudflare shipping markdown at the edge, agents that negotiate content types. The conversation is getting louder, and most of it conflates at least three different questions.

How do you get AI to cite and recommend your content? That's the positioning question, and it has two parts: what your content looks like when models are trained on it, and what it looks like when agents retrieve it to answer a prompt.

The second question: when an agent does show up, how do you serve your content without bloating its context window or losing meaning in translation? That's the content consumption question. Your content now has consumers you didn't design for: agents requesting your pages, humans copying your docs into Claude, RAG pipelines pulling your content into retrieval systems. None of them want your cookie banners, navigation chrome, or ad scripts. They want the content, and probably only the parts that actually answer what they're looking for.

And then: does agentic content consumption affect positioning? Does serving cleaner content to agents also improve how they represent you?

If you're a developer, the content consumption section is where the actionable stuff lives. If you're a content strategist, the positioning question is more your territory. Read both. You'll need to explain the other one to your team.

Let's start with Agent Engine Optimization (AEO, sometimes called GEO or "SEO for AI"), since that's what got everyone's attention: can you optimize your content so AI models cite you more?

Maybe. But the honest answer is: we don't really know yet.

One group that's been looking closely at this is Profound, a company that tracks how AI platforms cite and recommend content across ChatGPT, Perplexity, and Gemini. They've been publishing primary research on the topic.

In their latest study, they took 381 pages across 6 websites, randomly assigned half to serve markdown and half to serve HTML, and watched for three weeks. The result? They found no statistically significant increase in bot traffic from serving markdown. Their recommendation? Focus on fundamentals: quality content, clear structure, fast load times. "The format you serve them in? Probably not the leverage point you're looking for."

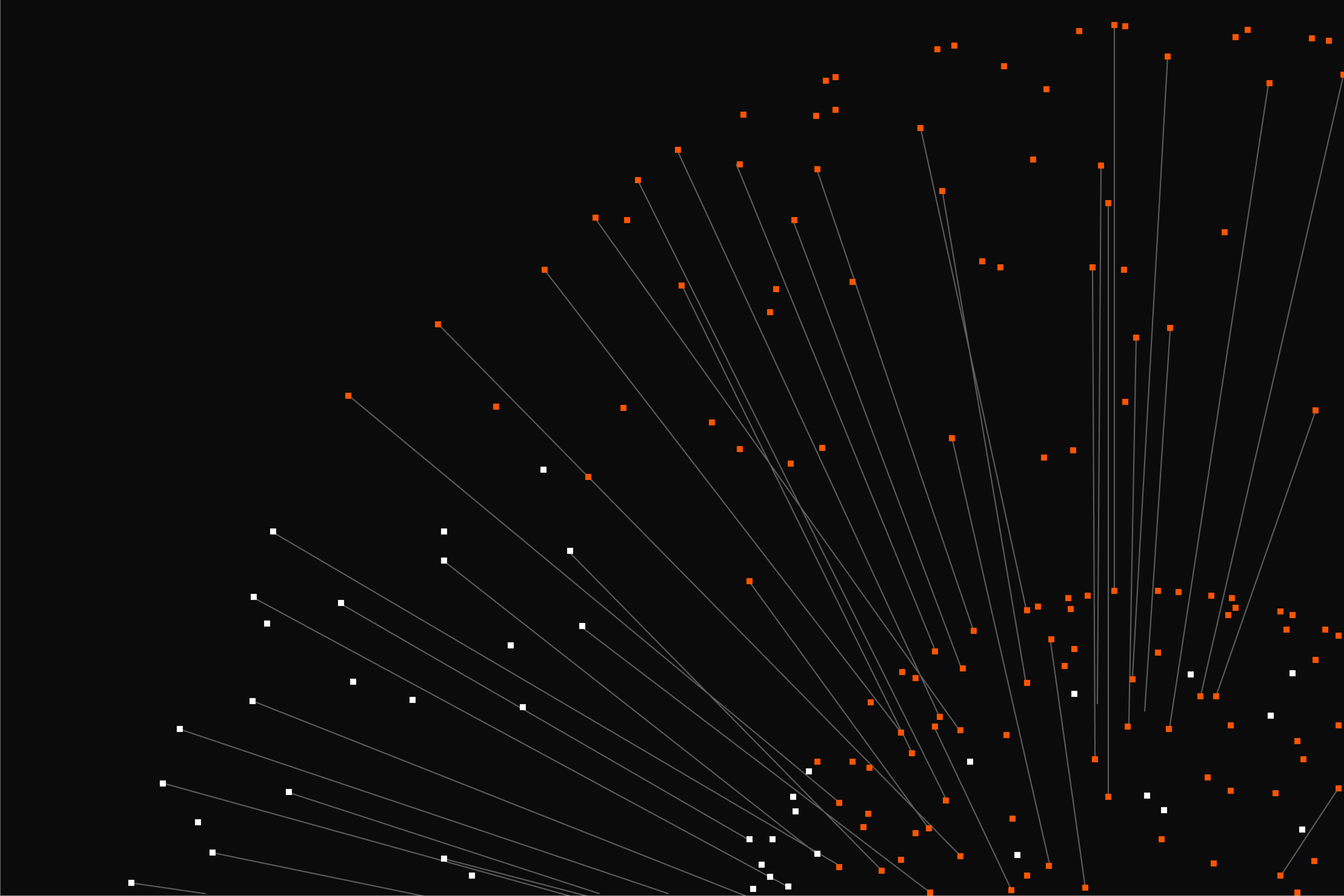

Their most important finding for anyone building an AEO strategy is that AI citations can shift by up to 60% in a single month. The page ChatGPT recommends today might not be the one it recommends next month.

If you're trying to "optimize" for a system that volatile, you're chasing a moving target.

There's academic work too. A paper from Princeton (published at KDD 2024, which feels like three decades ago on the AI timeline) found that certain strategies (adding statistics, using authoritative language, citing sources) could boost visibility by up to 40% in their benchmark. Worth noting: those numbers come from lab conditions, not from testing against ChatGPT or Perplexity in the wild. The strategies themselves are basically good writing advice, which is worth following regardless of AI.

Meanwhile, the models themselves are getting better at handling whatever you throw at them. Anthropic just released dynamic filtering for Claude's web search. The model now writes Python code to parse and filter HTML results before they hit the context window. The result: 11% better accuracy, 24% fewer input tokens. The models are investing heavily in solving the "finding you" problem on their end.

So the models will get better at finding you, but that doesn't make your content any cleaner. That part is up to you, which brings us to where you have agency.

Profound (the AI citation tracking company) measured bot visits: how often agents show up. They found that format doesn't change that. Agents show up regardless. Cool.

If agents are already showing up (and they are, check your server logs), then the question isn't how to attract them. It's what you serve them when they arrive. And not just agents: humans are copying your docs into AI tools, RAG pipelines are pulling your content into retrieval systems, edge services are converting your pages on the fly.

The strategies here are practical, the evidence that this might matter is strong, and you control the outcome.

Here's what the options look like, from zero effort to full infrastructure investment.

Agents will convert your HTML to markdown themselves. Every major AI tool (Claude, ChatGPT, Gemini, Perplexity) does this internally. Claude Code, for example, uses a library called Turndown. It works. It's also lossy and token-expensive. Your 100K-token HTML page becomes maybe 3K tokens of useful content after the agent strips out navigation, footers, scripts, and cookie banners. That's 97% of the context window spent on noise.

It gets worse if your site relies on client-side rendering. Most AI crawlers don't even execute JavaScript: they see your raw HTML, not your rendered page. Vercel's research found that ChatGPT and Claude crawlers fetch JavaScript files but don't execute them. Google's Gemini (via Googlebot) and Applebot are the exceptions. And even the agents that do render JavaScript (like Google's crawler or ChatGPT's Operator) still get the full DOM, navigation chrome and all. The token waste problem doesn't go away just because JavaScript executes.
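To make the conversion step concrete, here is a deliberately naive sketch of the cleanup an agent has to do on raw HTML. It is not how Turndown or any specific agent works (real converters use a DOM parser and emit markdown); it simply drops elements that are usually chrome and strips the remaining tags. Notice what survives: the text, but not the fact that "Install" was a heading.

```typescript
// Deliberately naive, agent-style cleanup of raw HTML: drop elements that are
// usually page chrome, then strip every remaining tag. Regex-based on purpose
// to show how crude a generic pass is; it also breaks on nested same-name tags.
const CHROME_ELEMENTS = ["script", "style", "noscript", "nav", "header", "footer", "aside"];

function roughTextFromHtml(html: string): string {
  let out = html;
  for (const tag of CHROME_ELEMENTS) {
    // Remove <tag ...> ... </tag>: non-greedy, case-insensitive, across lines.
    const element = new RegExp(`<${tag}\\b[^>]*>[\\s\\S]*?<\\/${tag}>`, "gi");
    out = out.replace(element, " ");
  }
  return out
    .replace(/<[^>]+>/g, " ") // strip remaining tags, losing headings, links, lists
    .replace(/&nbsp;/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

const page = `<html><head><style>body { margin: 0 }</style><script>track()</script></head>
<body><nav><a href="/">Home</a> <a href="/pricing">Pricing</a></nav>
<main><h1>Install</h1><p>Run the installer.</p></main>
<footer>© Example</footer></body></html>`;

console.log(roughTextFromHtml(page)); // Install Run the installer.
```

A generic pass like this has to guess which elements are chrome. Anything your site marks up differently (a custom sidebar component, a callout that is actually content) gets kept or dropped by accident.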

Llms.txt is a markdown file at a known URL (/llms.txt) that gives agents an overview of your site with links to detailed content. Over 2,000 sites have adopted it, including Next.js, shadcn/ui, TanStack, Cloudflare, and Hugging Face. It's simple to implement and useful as a discovery layer. Anthropic uses theirs as a lightweight sitemap: brief descriptions and links organized by section, 892 tokens total. Even the company building the agents treats llms.txt as an index, not a content dump.
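The format itself is simple. Per the llms.txt proposal, the file starts with an H1 naming the site, followed by a blockquote summary, then H2 sections containing markdown link lists; a section named "Optional" marks links an agent can skip when context is tight. A minimal generator, sketched in TypeScript with made-up example URLs and hypothetical type names, could look like this:

```typescript
// Sketch: build an llms.txt index from a list of sections and links.
// Type and function names are illustrative, not from any library.
interface LlmsLink {
  title: string;
  url: string;
  description?: string;
}

interface LlmsSection {
  heading: string; // "Optional" has special meaning in the proposal: skippable links
  links: LlmsLink[];
}

function buildLlmsTxt(siteName: string, summary: string, sections: LlmsSection[]): string {
  const lines: string[] = [`# ${siteName}`, "", `> ${summary}`];
  for (const section of sections) {
    lines.push("", `## ${section.heading}`, "");
    for (const link of section.links) {
      // "- [Title](url): notes" is the link-list shape the proposal uses
      const note = link.description ? `: ${link.description}` : "";
      lines.push(`- [${link.title}](${link.url})${note}`);
    }
  }
  return lines.join("\n") + "\n";
}
```

Keeping the descriptions short is what keeps the file an index rather than a content dump.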

But isn't llms.txt becoming a standard? It's becoming adopted, which isn't the same thing. llms.txt is a proposal from Jeremy Howard's FastHTML project, not a ratified standard. The GitHub issues show debates about merging it with other proposals (AGENTS.md), and scope creep into things like crypto wallet addresses and "emotional brand positioning extensions." More practically: it's all-or-nothing. An agent gets your entire corpus or nothing. No per-page granularity, no per-agent control, no governance over what gets consumed. For developer docs, that's probably fine. For anything you'd rather serve selectively, you've just made it trivially easy to copy-paste everything.

The spec also proposes llms-full.txt, a companion file containing your entire corpus as markdown. In theory, agents can grab everything at once. In practice, the numbers work against you. Cloudflare's developer docs produce an llms-full.txt of 46.6MB, roughly 12 million tokens, about 60x Claude's context window. Even when the file fits, longer context degrades model performance regardless of content quality. An agent that needs one answer doesn't benefit from receiving your entire library.
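The back-of-the-envelope math behind those numbers, as a small TypeScript sketch. It assumes roughly 4 bytes per token (a common rule of thumb for English text; real tokenizers vary by model and content) and a 200K-token context window as the comparison point.

```typescript
// Rough token arithmetic for an llms-full.txt file.
// Assumption: ~4 bytes per token; actual counts depend on the tokenizer.
const BYTES_PER_TOKEN = 4;

function estimateTokens(bytes: number): number {
  return Math.round(bytes / BYTES_PER_TOKEN);
}

// How many full context windows the file would occupy.
function contextWindowsNeeded(bytes: number, contextWindowTokens: number): number {
  return estimateTokens(bytes) / contextWindowTokens;
}

const llmsFullBytes = 46.6e6; // Cloudflare's llms-full.txt, ~46.6 MB
const contextWindowTokens = 200_000; // assumed context window size

console.log(estimateTokens(llmsFullBytes)); // 11650000
console.log(Math.round(contextWindowsNeeded(llmsFullBytes, contextWindowTokens))); // 58
```

Roughly 11.7 million tokens, or close to 60 context windows, which is why the file can't be consumed in one go.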

If you want a quick win that might pay off, add an llms.txt. If you want control over what agents get and when, content negotiation gives you more options (more on that below).

Cloudflare launched Markdown for Agents in February 2026: a dashboard toggle that converts your HTML to markdown at the edge when agents request it. No code changes. 80% token reduction on their own blog. It's a good default if you can't touch your content layer.

The tradeoff: reverse-engineering HTML back to markdown is inherently lossy. A generic parser doesn't know which parts of your page are content and which are chrome. Custom components, structured relationships, section-level meaning: none of it survives the round trip.

Agents have started to request markdown from you using the Accept header. So when an agent sends Accept: text/markdown, your server could respond with markdown. Same URL, different representation. The same content negotiation pattern HTTP has supported for decades. I built a Sanity course around this, and we use it for Sanity Learn itself.
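Proper negotiation means reading the Accept header's media ranges and quality (q) values, not just searching for a substring: `text/markdown;q=0` explicitly refuses markdown, yet it still contains the string. Here is a minimal sketch in TypeScript, assuming your server only chooses between HTML and markdown. The function names are illustrative, and breaking ties toward HTML (unless the client names markdown explicitly while HTML only matches a wildcard) is a design choice, not something HTTP mandates.

```typescript
// Sketch: pick HTML or markdown from an Accept header, honoring q-values.
// Only distinguishes the two representations this site serves.
type Representation = "text/html" | "text/markdown";

interface MediaRange {
  type: string; // e.g. "text/markdown", "text/*", "*/*"
  q: number; // quality value from 0 to 1 (defaults to 1)
}

interface Match {
  q: number;
  specificity: number; // 2 = exact, 1 = "type/*", 0 = "*/*", -1 = no match
}

function parseAccept(header: string): MediaRange[] {
  return header
    .split(",")
    .map((part) => part.trim())
    .filter((part) => part.length > 0)
    .map((part) => {
      const [type, ...params] = part.split(";").map((s) => s.trim());
      let q = 1;
      for (const param of params) {
        const [key, value] = param.split("=").map((s) => s.trim());
        if (key.toLowerCase() === "q" && value !== undefined && !Number.isNaN(Number(value))) {
          q = Number(value);
        }
      }
      return { type: type.toLowerCase(), q };
    });
}

// The most specific matching range determines the quality for a media type.
function matchQuality(ranges: MediaRange[], mediaType: string): Match {
  const major = mediaType.split("/")[0];
  const exact = ranges.find((r) => r.type === mediaType);
  if (exact) return { q: exact.q, specificity: 2 };
  const partial = ranges.find((r) => r.type === `${major}/*`);
  if (partial) return { q: partial.q, specificity: 1 };
  const any = ranges.find((r) => r.type === "*/*");
  if (any) return { q: any.q, specificity: 0 };
  return { q: 0, specificity: -1 };
}

function negotiate(acceptHeader: string | null): Representation {
  if (!acceptHeader) return "text/html"; // no preference: serve the default
  const ranges = parseAccept(acceptHeader);
  const md = matchQuality(ranges, "text/markdown");
  const html = matchQuality(ranges, "text/html");
  if (md.q === 0) return "text/html"; // markdown absent or refused (q=0)
  if (md.q > html.q) return "text/markdown";
  // Equal preference: favor markdown only if the client named it explicitly
  // while HTML was matched by a broader range (e.g. "text/markdown, */*").
  if (md.q === html.q && md.specificity > html.specificity) return "text/markdown";
  return "text/html";
}
```

Whichever representation you return, send `Vary: Accept` on both, so shared caches and CDNs don't hand a cached markdown response to a browser (or the reverse).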

It doesn’t take much code if you already use Sanity. With the @portabletext/markdown library, you can serialize the same content that renders to HTML into markdown:

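```typescript
// md/[section]/[article]/route.ts
import type { NextRequest } from "next/server";
// Hypothetical local helpers: fetchArticle loads the document from Sanity,
// toMarkdown wraps the @portabletext/markdown serializer.
import { fetchArticle, toMarkdown } from "@/lib/content";

export async function GET(
  _request: NextRequest,
  { params }: { params: Promise<{ section: string; article: string }> },
) {
  const { section, article } = await params;
  const doc = await fetchArticle(section, article);

  if (!doc) {
    return new Response("Not found", { status: 404 });
  }

  // Serialize the same Portable Text that renders to HTML into markdown
  const markdown = toMarkdown(doc.body);

  return new Response(markdown, {
    headers: {
      "Content-Type": "text/markdown; charset=utf-8",
    },
  });
}
```

Most frameworks and hosting platforms let you define URL rewrites too. Here is what content negotiation looks like in Next.js: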

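```typescript
// next.config.ts
import type { NextConfig } from "next";

const nextConfig: NextConfig = {
  async rewrites() {
    return {
      beforeFiles: [
        // Accept header negotiation: a substring match, so any Accept header
        // mentioning text/markdown is routed to the markdown handler
        {
          source: "/docs/:section/:article",
          has: [
            {
              type: "header",
              key: "accept",
              value: "(.*)text/markdown(.*)",
            },
          ],
          destination: "/md/:section/:article",
        },
      ],
      afterFiles: [],
      fallback: [],
    };
  },
};

export default nextConfig;
```

On our learning platform, the same lesson page goes from 392KB of HTML (~100K tokens) to 13KB of markdown (~3,300 tokens). That's a 97% reduction, and it's not lossy, because the markdown is generated from the structured source, not reverse-engineered from HTML.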

```shell
curl -sL \
  https://www.sanity.io/learn/course/markdown-routes-with-nextjs/portable-text-to-markdown \
  | wc -c
# ~401,000 bytes

curl -sL -H "Accept: text/markdown" \
  https://www.sanity.io/learn/course/markdown-routes-with-nextjs/portable-text-to-markdown \
  | wc -c
# ~13,000 bytes
```
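To connect the byte counts from those two curl commands to the token figures quoted above, here is the arithmetic as a small TypeScript sketch. It assumes roughly 4 bytes per token, a common rule of thumb for English text; actual counts depend on the model's tokenizer.

```typescript
// Compare the HTML and markdown representations of the same page.
// Assumption: ~4 bytes per token; real tokenizers vary.
const BYTES_PER_TOKEN = 4;

function compareRepresentations(htmlBytes: number, markdownBytes: number) {
  const htmlTokens = Math.round(htmlBytes / BYTES_PER_TOKEN);
  const markdownTokens = Math.round(markdownBytes / BYTES_PER_TOKEN);
  const reductionPercent = (1 - markdownBytes / htmlBytes) * 100;
  return { htmlTokens, markdownTokens, reductionPercent };
}

const result = compareRepresentations(401_000, 13_000);
console.log(result.htmlTokens); // 100250
console.log(result.markdownTokens); // 3250
console.log(result.reductionPercent.toFixed(1)); // 96.8
```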

And because the content is structured, you can serve it at multiple levels of granularity. An agent landing on any lesson finds links to the full course, a sitemap of all content, and the complete corpus. It picks the level that matches its task: one lesson for a specific question, a full course for context, the sitemap for discovery. Same content, four levels of access, all from the same structured source.
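One way to expose those levels is to append a short block of links to every markdown response. The sketch below is a hypothetical helper; the paths are placeholders, not the actual Sanity Learn URL scheme.

```typescript
// Hypothetical helper: append links to the other levels of granularity
// at the end of a lesson's markdown. Paths are illustrative placeholders.
interface LessonRef {
  courseSlug: string;
  lessonSlug: string;
}

function granularityFooter(origin: string, ref: LessonRef): string {
  const levels: Array<[label: string, path: string]> = [
    ["This lesson", `/learn/course/${ref.courseSlug}/${ref.lessonSlug}.md`],
    ["Full course", `/learn/course/${ref.courseSlug}.md`],
    ["Sitemap of all content", "/learn/sitemap.md"],
    ["Complete corpus", "/learn/llms-full.txt"],
  ];
  // Absolute URLs, so an agent can follow them without knowing the base URL
  const links = levels.map(([label, path]) => `- [${label}](${new URL(path, origin).href})`);
  return ["## More context", "", ...links].join("\n");
}

const lessonMarkdown = "# Portable Text to markdown\n\nLesson body goes here.";
const responseBody = `${lessonMarkdown}\n\n${granularityFooter("https://example.com", {
  courseSlug: "markdown-routes",
  lessonSlug: "portable-text-to-markdown",
})}\n`;
```

The agent decides how far to zoom out; the server just makes each level one link away.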

The granularity matters because of how agents actually work. AI coding agents are already requesting markdown. Remotion, Bun, and nuqs serve it. (For the curious: Claude Code currently sends Accept headers that omit text/html. The markdown preference is already baked in.) When a server responds with Content-Type: text/markdown, Claude Code skips its summarization step entirely. Your content goes straight to the model, verbatim. That's a fast path you earn by serving clean content.

The furthest end of the spectrum: skip the web entirely. Let agents query your content through tools: GROQ queries, GraphQL, the MCP server. No HTML-to-markdown conversion is needed, because there's no HTML involved. This is the most powerful approach, but it requires the most integration work. It's real and growing, but early for most sites.

And it also requires your users to install or instruct agents to do it this way, which might be fine if you are a SaaS company with users who are proficient in AI tools, but not realistic for most of the rest of us.

One more pattern is worth mentioning, because it shows the demand from the human side too: "copy page as markdown" buttons. Cursor, Vercel, and Remotion docs all have them, and the popular documentation platform Mintlify ships them out of the box. Cursor also has "Open in ChatGPT" and "Open in Claude" buttons. We have them on our docs and learning platform too. Developers are manually doing what agents should get automatically. The frugality argument isn't just about bots.

Each strategy in that spectrum asks something different from your content system.

| Strategy | What your system needs |
|---|---|
| Do nothing | Nothing. Agents handle conversion. |
| llms.txt | A way to export all your content as markdown |
| Cloudflare edge | Cloudflare hosting (conversion is lossy) |
| Markdown routes | Structured content that serializes to markdown |
| MCP / API | Structured content + query language + governance |

Notice the pattern. The further along the spectrum you go, the more your system needs to treat content as queryable data rather than documents to be parsed. If your content lives as markdown files in a repo, you can serve markdown, but that's all you can serve. You can't query it ("give me all articles tagged X published this quarter"). You can't serve HTML to browsers, markdown to agents, and JSON to your mobile app from the same source without building a (gnarly) pipeline for each. We covered this in more depth in “You should never build a CMS.”
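For comparison, here is roughly what "all articles tagged X published this quarter" looks like against structured content: a GROQ query sent to Sanity's HTTP Query API, where parameters travel as `$name` query-string entries with JSON-encoded values. The `article` type and its `tags` and `publishedAt` fields are hypothetical schema choices, and the project ID, dataset, and API version are placeholders.

```typescript
// Sketch: build a Sanity Query API URL for a parameterized GROQ query.
// Schema fields (article, tags, publishedAt) are hypothetical.
function groqQueryUrl(
  projectId: string,
  dataset: string,
  apiVersion: string, // a date such as "2025-01-01"
  query: string,
  params: Record<string, unknown>,
): URL {
  const url = new URL(`https://${projectId}.api.sanity.io/v${apiVersion}/data/query/${dataset}`);
  url.searchParams.set("query", query);
  for (const [name, value] of Object.entries(params)) {
    // GROQ parameters are passed as $name=<JSON-encoded value>
    url.searchParams.set(`$${name}`, JSON.stringify(value));
  }
  return url;
}

// "All articles tagged X published this quarter", newest first
const query = `*[_type == "article" && $tag in tags && publishedAt >= $from]
  | order(publishedAt desc) { title, "slug": slug.current }`;

const url = groqQueryUrl("your-project-id", "production", "2025-01-01", query, {
  tag: "ai",
  from: "2026-01-01",
});
// const { result } = await (await fetch(url)).json(); // private datasets also need a token
```

The same query result can then be rendered as HTML, serialized to markdown, or returned as JSON, which is the point of keeping the source structured.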

Markdown was designed as a “writing format” back in the day, when HTML was the only destination. (Gruber even offered a .text suffix to view the markdown source of each page. Content negotiation before we called it that.) We see it as a serialization format, not a content storage format that scales. (LLMs are great at SQL too; that doesn’t mean you store your database as .sql files in git.)
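Treating markdown as a serialization format means generating it from structured content. Here is a deliberately tiny Portable Text to markdown serializer, sketched in TypeScript to show the idea. It is not the @portabletext/markdown API: it only handles normal and h1 to h3 blocks, bullet list items, and the strong and em decorators, using Portable Text's `_type: "block"`, `children`, and `marks` shape.

```typescript
// Tiny Portable Text -> markdown sketch (not a full or official serializer).
interface Span {
  _type: "span";
  text: string;
  marks?: string[];
}

interface Block {
  _type: "block";
  style?: string; // "normal", "h1", "h2", "h3", ...
  listItem?: "bullet";
  children: Span[];
}

const MARK_WRAPPERS: Record<string, string> = { strong: "**", em: "_" };
const HEADING_PREFIX: Record<string, string> = { h1: "# ", h2: "## ", h3: "### " };

function spanToMarkdown(span: Span): string {
  return (span.marks ?? []).reduce((text, mark) => {
    const wrap = MARK_WRAPPERS[mark];
    return wrap ? `${wrap}${text}${wrap}` : text; // unknown marks pass through as plain text
  }, span.text);
}

function blockToMarkdown(block: Block): string {
  const text = block.children.map(spanToMarkdown).join("");
  if (block.listItem === "bullet") return `- ${text}`;
  return (HEADING_PREFIX[block.style ?? "normal"] ?? "") + text;
}

function toMarkdownSketch(blocks: Block[]): string {
  const out: string[] = [];
  blocks.forEach((block, i) => {
    const prev = blocks[i - 1];
    // Consecutive list items stay tight; everything else gets a blank line.
    const tight = block.listItem && prev?.listItem;
    if (i > 0) out.push(tight ? "\n" : "\n\n");
    out.push(blockToMarkdown(block));
  });
  return out.join("");
}
```

Because the serializer knows a block is an `h2` or a list item, that meaning carries straight into the markdown instead of being guessed back from HTML.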

The systems that serve agents best are the ones where content is structured, governed, and queryable. That's true for markdown routes, and it's even more true as agents move from fetching web pages to accessing content directly through tools and APIs. That's a bigger conversation, one that touches how organizations manage knowledge for both humans and machines. But it starts with the same infrastructure question.

Half of the specific tools I just mentioned will look different in six months. New standards will emerge. Agents will get smarter. Claude's dynamic filtering shipped today. Chrome is previewing WebMCP, a proposal that lets websites expose structured tools directly to in-browser AI agents, so they can call functions instead of scraping pages. By the time you read this, there's probably something newer. The landscape is genuinely moving fast.

But the mental model holds. The difference between "positioning yourself for AI discovery" and "being intentional about how your content gets consumed" isn't going away. They're different questions with different evidence bases and different levels of maturity. Knowing which one you're solving for changes what you should invest in.

If this sounds familiar, it should. Serving the right format to the right consumer is multichannel distribution. Developers have been doing this for years: HTML for browsers, JSON for mobile apps, RSS for feed readers. AI agents are just the newest channel. The durable investments are the same ones that have always mattered: clean content structure, standards-based content negotiation, governance over what gets published where. These pay off regardless of which specific tools win.

Here's what I'd tell a team asking me where to start.

Start with your server logs. Are agents already visiting? What are they requesting? You might be surprised. Then serve markdown to agents that ask for it. If an agent sends Accept: text/markdown, respond with markdown. It's a decades-old HTTP pattern, and it's the highest-ROI move for most sites right now.
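A quick way to answer "are agents already visiting, and what are they requesting?" is to scan your access logs for known AI user-agent tokens. The sketch below assumes the common "combined" log format, where the request line and the user agent are quoted fields; the token list is partial and illustrative (check vendor docs or Dark Visitors for current names), and user agents can be spoofed. Most default log formats don't record the Accept header, so to see who asks for markdown you would need to add it to your log format.

```typescript
// Sketch: summarize AI-agent traffic from access-log lines in the
// combined format: ... "GET /path HTTP/1.1" status bytes "referer" "user-agent"
const AI_AGENT_TOKENS = [
  "GPTBot",
  "ChatGPT-User",
  "OAI-SearchBot",
  "ClaudeBot",
  "Claude-User",
  "Claude-SearchBot",
  "PerplexityBot",
  "Perplexity-User",
  "CCBot",
  "Bytespider",
];

interface AgentStats {
  hits: number;
  paths: Set<string>;
}

function summarizeAiAgents(logLines: string[]): Map<string, AgentStats> {
  const stats = new Map<string, AgentStats>();
  for (const line of logLines) {
    // The user agent is the last quoted field in the combined format.
    const userAgent = /"([^"]*)"\s*$/.exec(line)?.[1] ?? "";
    const agent = AI_AGENT_TOKENS.find((token) => userAgent.includes(token));
    if (!agent) continue;
    // The request line looks like "GET /docs/intro HTTP/1.1".
    const path = /"[A-Z]+ (\S+) HTTP\/[\d.]+"/.exec(line)?.[1] ?? "(unknown)";
    const entry = stats.get(agent) ?? { hits: 0, paths: new Set<string>() };
    entry.hits += 1;
    entry.paths.add(path);
    stats.set(agent, entry);
  }
  return stats;
}
```

In Node, you could feed it `fs.readFileSync(logPath, "utf8").split("\n")` for a first look before reaching for proper log tooling.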

Beyond that:

Probably don’t do llms-full.txt. Dumping your whole corpus into a single markdown file is likely to lead to context bloat and “semantic collapse.” Agents seem to prefer curated, specific content that matches the intent they’re resolving.

Watch the positioning space, and start to bet on it. Follow Profound's research. They're doing the most focused work in this space. When there's proven methodology for getting cited by AI search, invest in it. Until then, assume that the answer is going to be “serve quality content that fulfills what your audience might be looking for.”

Think about governance. Know which bots are visiting and what they're doing. Dark Visitors tracks 80+ AI-specific bots across 15 categories. Your robots.txt probably needs updating.
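Updating robots.txt per bot is straightforward to script. The sketch below builds the file from per-agent rules; the policy in the usage example is purely illustrative, not a recommendation, and robots.txt is advisory (RFC 9309): well-behaved crawlers honor it, others may not.

```typescript
// Sketch: build a robots.txt from per-agent allow/disallow rules.
interface RobotsRule {
  userAgent: string;
  allow?: string[];
  disallow?: string[];
}

function buildRobotsTxt(rules: RobotsRule[], sitemapUrl?: string): string {
  const groups = rules.map((rule) => {
    const lines = [`User-agent: ${rule.userAgent}`];
    for (const path of rule.allow ?? []) lines.push(`Allow: ${path}`);
    for (const path of rule.disallow ?? []) lines.push(`Disallow: ${path}`);
    return lines.join("\n");
  });
  if (sitemapUrl) groups.push(`Sitemap: ${sitemapUrl}`);
  return groups.join("\n\n") + "\n";
}

// Illustrative policy only: decide per bot what fits your content.
const robots = buildRobotsTxt(
  [
    { userAgent: "GPTBot", disallow: ["/account/"] }, // OpenAI crawler
    { userAgent: "ClaudeBot", disallow: ["/account/"] }, // Anthropic crawler
    { userAgent: "CCBot", disallow: ["/"] }, // Common Crawl
    { userAgent: "*", allow: ["/"] },
  ],
  "https://example.com/sitemap.xml",
);
```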

If you're building with Sanity, we've published SEO and AEO best practices as an agent skill that agents can use directly when implementing these patterns. It includes EEAT principles, structured data guidance, and technical SEO checklists, as well as the emerging best practices for AEO.

And invest in your content structure. It's the unsexy one, but it's the one that compounds. If your content is structured, markdown is just another output. So is HTML. So is JSON. So is whatever format the next generation of agents will want. You're not optimizing for AI. You're doing what good content infrastructure has always done: serving the right thing to whatever shows up asking for it.

Which brings us back to the third question: does serving cleaner content to agents also help you get cited? We don't know yet. Profound's data suggests format alone isn't the lever, but the research is young and the landscape is moving fast. What we do know is that the content consumption work pays off on its own. And if the connection to positioning turns out to be real, you're already there.

The next time someone tells you to make your content "AI-ready," ask them which kind. The answer changes the conversation.
