The pragmatist's guide to AI-powered content operations

Stop chasing AI strategies. Start eliminating expensive content chores. A practical 30-day guide to implementing AI that delivers measurable value.

Knut Melvær

Head of Developer Community and Education

Imagine this: Your content team spent three hours yesterday updating metadata across 50 product pages. They'll spend three hours tomorrow doing it again for a different category. By Friday, they'll have invested 15 hours on work that nobody will notice unless it's wrong.

And you might be thinking: "This seems like something we could automate. We need an AI strategy."

You're absolutely right!

But not in the way most companies approach it. You don't need a strategy workshop. You need to get practical.

Meanwhile, content teams feel the AI pressure. Some respond with professional resistance because they recognize it's hard to translate the coordination and context of their work into a chat box. Others have tried AI and found it underwhelming.

But as technologists, we know generative AI is powerful and capable. So how do you get your team to shift their perspective? How do you introduce AI without joining the majority of pilots that stall or fail?

This guide and the AI-readiness test help you assess your content system's AI readiness and show you how to run a 30-day pilot that gives you measurable business outcomes.

Want to know if your CMS architecture can actually eliminate these chores?

Find out if your CMS has the architectural foundation to eliminate content chores, or if you're building on infrastructure that can't support AI operations.

You'll get:

- Complete 30-day implementation roadmap for your first chore

- The 15-point AI Readiness assessment tool

- Function recipe for automated metadata generation

- Measurement framework for business outcomes

- Community resources

Do first. Strategize later.

It's easy to fall into the trap: The "we need to figure out AI" question turns into strategy workshops and endless meetings to align the organization. Meanwhile, nothing ships.

Here's the thing though:

The companies succeeding with AI don't start with "How do we AI?". They start with "What's blocking our team?" They pick one repetitive problem and eliminate it. Then they move to the next one. They are going deep and specific.

The difference is fundamental. It's the difference between "We need an AI strategy" and "We need to stop spending three hours on metadata every day." Between transformation theater and actual improvement. Between expensive pilots that stall and tactical wins that compound.

Working with AI is a skill. It's an adventure. You have to get your feet wet and you have to get the repetitions in. But having a plan helps. And solving a concrete problem is how you build that invaluable experience and establish trust in the technology.

When travel-tech startup loveholidays scaled from managing 2,000 hotel descriptions to 60,000, their content team didn't grow. Their content operations did. Mike Jones, their CTO, shared this story at the Everything *[NYC] conference. They automated the repetitive work. And it increased conversion, because the quality was improved especially on the long-tail of less popular offerings.

“All 60,000 of those hotels are now managed by that same content team.” —Mike Jones, CTO of loveholidays

They didn't start with an AI strategy. The team at loveholidays identified an expensive operational chore, writing and maintaining tens of thousands of hotel descriptions, and eliminated it systematically. Same content strategy throughout. Systematically better operations.

This distinction between content strategy and content operations matters because most AI initiatives fail at this boundary. Content strategy is what you decide to create and why: which topics to cover, what messages to communicate, how to position your brand. It requires business judgment, market understanding, and creative thinking.

Content operations is how you execute that strategy, which can be:

- tagging and categorizing content

- linking and cross-referencing

- validating against guidelines

- translating and localizing

- optimizing for search and accessibility

- managing workflows and approvals

- publishing and distributing

Within these areas, you'll find that every content team has a backlog of operational work that blocks strategic execution:

"We can't launch in new markets fast enough because translation is bottlenecked."

"Our style guide compliance is inconsistent because manual checking is impossible at scale."

"We're spending more time formatting and distributing than actually creating."

These aren't content creation problems. They're operations problems. And this is where you are going to start looking for opportunities to get practical with AI.

Don't ask your team: "Can we use AI for content?"

Ask them: "Which of our content operations are creating friction for us and blocking our business?"

Starting with this question changes everything. You're not chasing AI adoption metrics. You're solving specific operational problems that happen to be solvable with AI. And those should be measurable by business outcomes.

Does your CMS architecture support AI?

Here's what we see: your CMS architecture determines AI success more than the AI itself. The most sophisticated AI hitting a legacy content architecture is like putting a Ferrari engine in a horse carriage. Sure, it has power. But it's still fundamentally the wrong vehicle.

Before you can introduce AI-powered content operations, you need to understand to what degree your content infrastructure supports them.

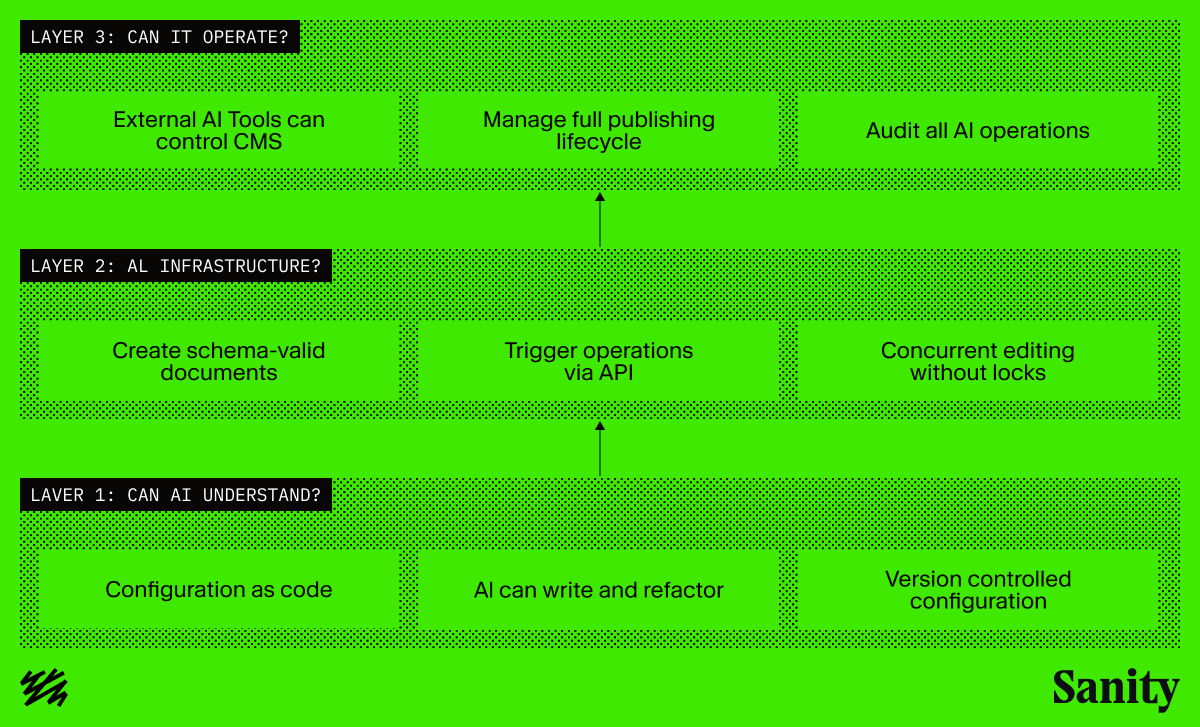

The assessment explores the three layers of succeeding with AI-powered content operations, each building on the previous one.

Layer 1: Can AI understand your CMS?

This is about whether AI can even comprehend how your content is structured. Try this test: Ask Cursor or Claude Code to help you create a content model for a blog with authors, categories, and SEO metadata.

For UI-based CMSes, you get instructions like: "Navigate to Content Model section, click 'Create New Content Type', add field 'title', select type 'Short text'..." AI can write these instructions, but it can't execute them. It can't test them. It can't version control them. When something breaks, AI can't see what's wrong because the configuration lives in a database somewhere, not in code.

When configuration is code, AI can generate and update complete working schemas. It can suggest validation rules based on requirements. It can refactor schemas when requirements change. It can spot issues. You can ask "why isn't this working?" and paste actual code. It can use schema code as context to generate queries, frontend components, and migration scripts.

```typescript
import {defineType, defineField} from 'sanity'

export const productType = defineType({
  name: 'product',
  title: 'Product',
  description: 'A coffee product offered by the roastery',
  type: 'document',
  fields: [
    defineField({
      name: 'name',
      title: 'Name',
      type: 'string',
      validation: Rule => Rule.required(),
    }),
    defineField({
      name: 'origin',
      title: 'Origin',
      description: 'Where the coffee was grown',
      type: 'reference',
      to: [{type: 'origin'}],
    }),
    defineField({
      name: 'tastingNotes',
      title: 'Tasting notes',
      description: 'Short flavor descriptors like “floral”, “citrus”, “chocolate”',
      type: 'array',
      of: [{type: 'string'}],
    }),
  ],
})
```

Above is an example of an AI-generated Sanity Studio schema type for "a small coffee roastery." With Sanity, you get an instant preview of the editorial interface, so you can validate that the editorial experience is what you want to ship to your organization. That makes the feedback loop very fast.
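Because the schema is code, it can also serve as context for generating queries and types. The sketch below shows what that might look like for the product schema above. It is illustrative only: `ProductListItem`, `productListQuery`, and `describeProduct` are hypothetical names, and the `name` field on the referenced `origin` document is an assumption, since the origin schema is not shown.

```typescript
// Result shape written by hand from the product schema above.
// Assumption: the referenced `origin` document has a `name` field.
interface ProductListItem {
  _id: string
  name: string
  originName: string | null
  tastingNotes: string[]
}

// GROQ query that follows the `origin` reference and
// falls back to an empty array when no tasting notes are set.
const productListQuery = `*[_type == "product"]{
  _id,
  name,
  "originName": origin->name,
  "tastingNotes": coalesce(tastingNotes, [])
}`

// Small helper showing how the typed result can be used downstream.
function describeProduct(product: ProductListItem): string {
  const notes =
    product.tastingNotes.length > 0 ? product.tastingNotes.join(', ') : 'no tasting notes yet'
  const from = product.originName ? ` from ${product.originName}` : ''
  return `${product.name}${from}: ${notes}`
}
```

The point is less the query itself than the workflow: when the content model lives in a file, the same file can be pasted into a prompt, diffed, and reviewed like any other code.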

Layer 2: Does your CMS have AI infrastructure?

Understanding your content model is necessary but not sufficient. Can your CMS actually execute AI operations, or is it cosplaying as AI-powered with expensive autocomplete? Most CMS APIs force you to become an AI orchestrator, juggling multiple endpoints, managing state, handling auth tokens.

The problematic patterns: Platforms that let you configure AI through their API but force execution through the web interface only. No SDK methods, no completion webhooks, no way to know when AI finishes. Or platforms where changing a prompt requires a full build-deploy-restart cycle. Or systems built on traditional request-response cycles that hit hard limits when processing content at scale. Try to enhance 1,000 documents and you're fighting timeouts, memory limits, and document locking issues.
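If your platform does push bulk work onto you, one common mitigation is to process documents in small batches and collect failures instead of aborting. The following is a minimal sketch under stated assumptions: `processInBatches` is a hypothetical helper, and `enhance` stands in for whatever AI call your system exposes. It does not address document locking or platform rate limits.

```typescript
// Process items in fixed-size batches so no single request handles the whole backlog.
// `enhance` is a hypothetical stand-in for the AI operation your platform provides.
async function processInBatches<T, R>(
  items: T[],
  batchSize: number,
  enhance: (item: T) => Promise<R>,
): Promise<{results: R[]; failures: {item: T; error: unknown}[]}> {
  if (batchSize < 1) throw new Error('batchSize must be at least 1')
  const results: R[] = []
  const failures: {item: T; error: unknown}[] = []

  for (let start = 0; start < items.length; start += batchSize) {
    const batch = items.slice(start, start + batchSize)
    // Catch per item so one failed document does not abort the rest of the batch.
    const outcomes = await Promise.all(
      batch.map(async (item) => {
        try {
          return {ok: true as const, value: await enhance(item)}
        } catch (error) {
          return {ok: false as const, item, error: error as unknown}
        }
      }),
    )
    for (const outcome of outcomes) {
      if (outcome.ok) results.push(outcome.value)
      else failures.push({item: outcome.item, error: outcome.error})
    }
  }
  return {results, failures}
}
```

Calling `processInBatches(documents, 25, enhanceDocument)` would run at most 25 operations at a time and return the failed documents separately so they can be retried.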

What separates platforms that claim "AI integration" from those built for AI: schema-aware AI that understands your content model natively. AI should be able to generate, translate, and transform documents of structured content, and not just act on individual isolated fields.

At the Everything *[NYC] workshop, developers built production-ready implementations in one day. Brand guideline validators, recursive translation workflows, OG image generators. These weren't toy demos. They were solving real business challenges because the infrastructure made AI integration straightforward rather than heroic.

Layer 3: Can AI operate on your content?

The final test: can external AI tools control your CMS, or are you locked into whatever your vendor decides to build?

AI capabilities are evolving faster than any single vendor can keep up with. If you can only use your CMS vendor's built-in AI, you're betting they'll always have the best AI, forever. That's not a good bet.

Organizations with API-first architectures can plug into any AI tool or workflow. Those with UI-first architectures are stuck waiting for their vendor to integrate each new AI capability.

Having an MCP server is a good start. But does it only let AI query content, or update it as well? Can it interact with other platform features like content releases? And are there abstractions that let AI operate on structured content as a whole, or is it up to you to handle the complexity of building a schema-valid piece of content from field-by-field operations?

Your assessment score

Make sure you have taken the full 15-point assessment. This will give you an idea of whether your current content system is up to the job for AI. If you find your system lacking, you might still be able to run the AI pilot, but make a point of documenting the friction points.

Your score tells you where you are:

12–15 points: AI-native architecture. You have true AI infrastructure, not just features. The implementations in this guide are achievable with weeks of work, not months.

8–11 points: AI-capable with gaps. You have some infrastructure but significant limitations. You're probably building workarounds for missing pieces. Consider whether those gaps are architectural or just missing features.

4–7 points: AI features, not infrastructure. Your CMS has added AI capabilities on top of legacy architecture. You're getting some value but missing the transformative potential. Many recommendations in this guide will be difficult or impossible.

0–3 points: AI theater. Your "AI-powered" CMS is mostly marketing. The architecture wasn't built for AI and it shows. You're likely spending more time working around limitations than getting value from AI.
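If you track scores for several systems or teams, the bands above are easy to encode. This is a small sketch with hypothetical function names; the thresholds are the ones listed in this guide, and the pilot cut-off of 8 points follows the guide's "more than 7" rule.

```typescript
type ReadinessBand =
  | 'AI-native architecture'
  | 'AI-capable with gaps'
  | 'AI features, not infrastructure'
  | 'AI theater'

// Map a 0–15 assessment score to the band described in this guide.
function readinessBand(score: number): ReadinessBand {
  if (!Number.isInteger(score) || score < 0 || score > 15) {
    throw new RangeError('score must be an integer between 0 and 15')
  }
  if (score >= 12) return 'AI-native architecture'
  if (score >= 8) return 'AI-capable with gaps'
  if (score >= 4) return 'AI features, not infrastructure'
  return 'AI theater'
}

// The guide treats a score of 8 or more as ready for the 30-day pilot.
function readyForPilot(score: number): boolean {
  return readinessBand(score) === 'AI-native architecture' || readinessBand(score) === 'AI-capable with gaps'
}
```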

If you scored below 8, you have a strategic decision to make. The honest answer: if your infrastructure isn't ready, AI initiatives will fail. Not because AI doesn't work, but because your architecture prevents AI from working. You can try to implement AI on inadequate infrastructure and join the 95% with no measurable impact, or you can fix the foundation first.

Do by Friday

Run the complete 15-point assessment. Document your score and specific gaps.

Share results with your CTO by Friday with a clear question: "Are these gaps architectural, or can our platform evolve to support this?"

Of course, if your CTO concludes that your platform can't evolve to close these gaps, you can always reach out to us for advice.

If you scored more than 7, then you're probably ready to dive in and automate your first content operations chore. We'll factor in a month, but you might be able to do it way faster than that!

Your first 30 days: Eliminating one expensive chore

Start with one expensive content chore. The atomic improvement approach: Identify one specific, measurable, expensive chore. Eliminate it. Measure the impact. Share the results. Repeat.

The compound effect of small, specific improvements beats the ambitious transformation project every time. This is how loveholidays went from 2,000 to 60,000 hotel descriptions. This is how The Metropolitan Museum of Art improved style guide compliance without adding headcount. This is how Sanity Learn manages 100,000 learners with a one-person team. This is how Complex Networks is able to add relevant product recommendations to its editorial content at scale.

The pilot

This is the chore we are going to eliminate: Contextual product recommendations in content.

This is the highest-success-rate first project because it's repetitive, rule-based, time-consuming, measurable, and directly impacts revenue. Every media or commerce brand with content and products has this problem.

If you don't have products, you can adapt this exercise to be any other "related" content. For example, if you do a lot of editorial content, then it can be a "related stories" implementation. Or if you are running an agency website, it can be "related services" to your customer stories or the like.

Week 1: Audit your current process

Before you build anything, understand exactly what you're eliminating. Track one week of product recommendation work across your content team. How many pieces of content need product recommendations? How long does it take per piece? What's the consistency rate? What gets skipped when deadlines loom?

Not sure which chore to target first? Ask your content team what they copy-paste repeatedly. That's automation waiting to happen. Watch for manual data entry patterns where editors transcribe information from one place to another. Listen for complaints about tedious tasks. Track what gets skipped or done poorly when deadlines loom. These signals reveal expensive chores blocking your team.

For inspiration and buy-in, you can point to how Complex Networks actually did this (see the Complex Networks case study). You might have to translate it to your reality, but the principles should be fairly straightforward.

Calculate the baseline

Let's say you publish 1,000 articles per month across fashion, culture, and lifestyle content. Each article should link to 4-6 contextually relevant products from your catalog of 15,000 items. Currently, editors spend about 5 minutes (or more!) per article searching your product catalog, reading product descriptions, evaluating relevance, and manually linking items.

That's 5,000 minutes monthly. 83 hours. At $75/hour loaded rate, that's $6,225/month just on manual product selection.

But the real cost is opportunity cost. When editors rush, they pick obvious products or skip this step entirely. About 40% of articles get generic or no product recommendations. That's 400 articles per month with zero conversion potential.

Start by documenting this as your baseline.
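To keep the baseline reproducible, it can help to write the arithmetic down once. The sketch below recomputes the example figures; `ChoreBaseline` and `monthlyBaseline` are hypothetical names, and the inputs are the example numbers from this section, not measurements.

```typescript
interface ChoreBaseline {
  itemsPerMonth: number
  minutesPerItem: number
  loadedHourlyRate: number
}

// Convert per-item effort into monthly minutes, hours, and cost.
function monthlyBaseline(baseline: ChoreBaseline): {minutes: number; hours: number; cost: number} {
  const minutes = baseline.itemsPerMonth * baseline.minutesPerItem
  return {
    minutes,
    hours: minutes / 60,
    cost: (minutes * baseline.loadedHourlyRate) / 60,
  }
}

// Example figures from this guide: 1,000 articles, 5 minutes each, $75/hour.
const articleBaseline = monthlyBaseline({itemsPerMonth: 1000, minutesPerItem: 5, loadedHourlyRate: 75})
// articleBaseline.minutes is 5,000 and articleBaseline.hours is about 83.3.
// Unrounded, the cost is $6,250; the guide rounds to 83 hours first, giving 83 × $75 = $6,225.
```

Swap in your own measurements from the week-one audit; the rounding difference shows why it is worth stating whether hours are rounded before or after multiplying.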

Week 2: Validate with a proof of concept

Before building anything, prove AI can actually eliminate this chore. Spend three days testing whether AI can match products to content contextually.

Do the following:

- Use ChatGPT, Gemini, or Claude directly with your content.

- Take 20 existing articles that already have manually-selected products.

- Give the AI the article content and your product catalog (a list with product name and simple description).

- Ask it to recommend 4-6 relevant products.

- Compare AI recommendations to human-selected products.

Measure:

- Does AI select contextually relevant items?

- Does it understand topic relationships (streetwear article → specific sneaker models)?

- Would you publish these recommendations?

For product matching, you'll likely see 75–85% accuracy compared to human selection, better discovery of niche relevant products editors might miss, and the ability to generate recommendations in under 10 seconds instead of 5 minutes.
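One way to put a number on the comparison is to measure how many AI picks also appear in the editor's selection. This is a sketch, not a definition of the accuracy figure quoted above: `recommendationOverlap` is a hypothetical helper, and agreement with human picks is only a proxy, since a pick the editor did not choose may still be a good niche recommendation.

```typescript
// Compare AI-recommended product IDs with the products an editor selected.
function recommendationOverlap(
  humanPicks: string[],
  aiPicks: string[],
): {agreement: number; matched: string[]; novel: string[]} {
  const human = new Set(humanPicks)
  // De-duplicate AI picks so a repeated ID is not counted twice.
  const uniqueAiPicks = Array.from(new Set(aiPicks))
  const matched = uniqueAiPicks.filter((id) => human.has(id))
  // Novel picks are not necessarily wrong; they are the ones an editor should review.
  const novel = uniqueAiPicks.filter((id) => !human.has(id))
  const agreement = uniqueAiPicks.length === 0 ? 0 : matched.length / uniqueAiPicks.length
  return {agreement, matched, novel}
}
```

Averaging `agreement` over the 20 test articles gives a rough quality figure, while the `novel` lists are the ones to read through by hand.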

Do the maths; build the business case

Calculate potential savings. If AI reduces product selection from 5 minutes to 30 seconds (including review), that's 90% time savings. Monthly: 83 hours becomes 8.3 hours. That's $5,603/month saved, minus the sub-$100 cost of AI usage for this type of automation.

More importantly, you can now add quality product recommendations to all 1,000 articles instead of skipping 400. That takes coverage from 600 to 1,000 articles, roughly 1.7x as many pages with conversion potential.

Critical decision point: If your spike doesn't show at least 70% quality and potential for 50% time savings, stop. Pick a different chore or fix your infrastructure.

Don't continue investing in AI that isn't eliminating friction.
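The business case and the go/no-go rule can be written as a few lines of arithmetic. This is a sketch with hypothetical names; the 70% quality and 50% time-savings thresholds come from the decision point above, and the $75 AI cost in the usage note is an example figure.

```typescript
interface SpikeResult {
  qualityRate: number // share of AI recommendations you would publish, 0 to 1
  minutesBefore: number
  minutesAfter: number
}

// Fraction of time saved per item, for example 5 minutes down to 0.5 minutes is 0.9.
function timeSavingsRate(minutesBefore: number, minutesAfter: number): number {
  if (minutesBefore <= 0) throw new RangeError('minutesBefore must be positive')
  return (minutesBefore - minutesAfter) / minutesBefore
}

// Decision gate from this guide: at least 70% quality and 50% time savings.
function shouldContinue(spike: SpikeResult): boolean {
  return spike.qualityRate >= 0.7 && timeSavingsRate(spike.minutesBefore, spike.minutesAfter) >= 0.5
}

// Net monthly savings after subtracting AI usage cost.
function monthlyNetSavings(
  hoursBefore: number,
  savingsRate: number,
  hourlyRate: number,
  monthlyAiCost: number,
): number {
  return hoursBefore * savingsRate * hourlyRate - monthlyAiCost
}
```

With the example numbers, `monthlyNetSavings(83, 0.9, 75, 75)` comes out at about $5,527 per month.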

Week 3: Build the minimum viable automation

The spike worked. Now build the simplest production implementation that delivers value.

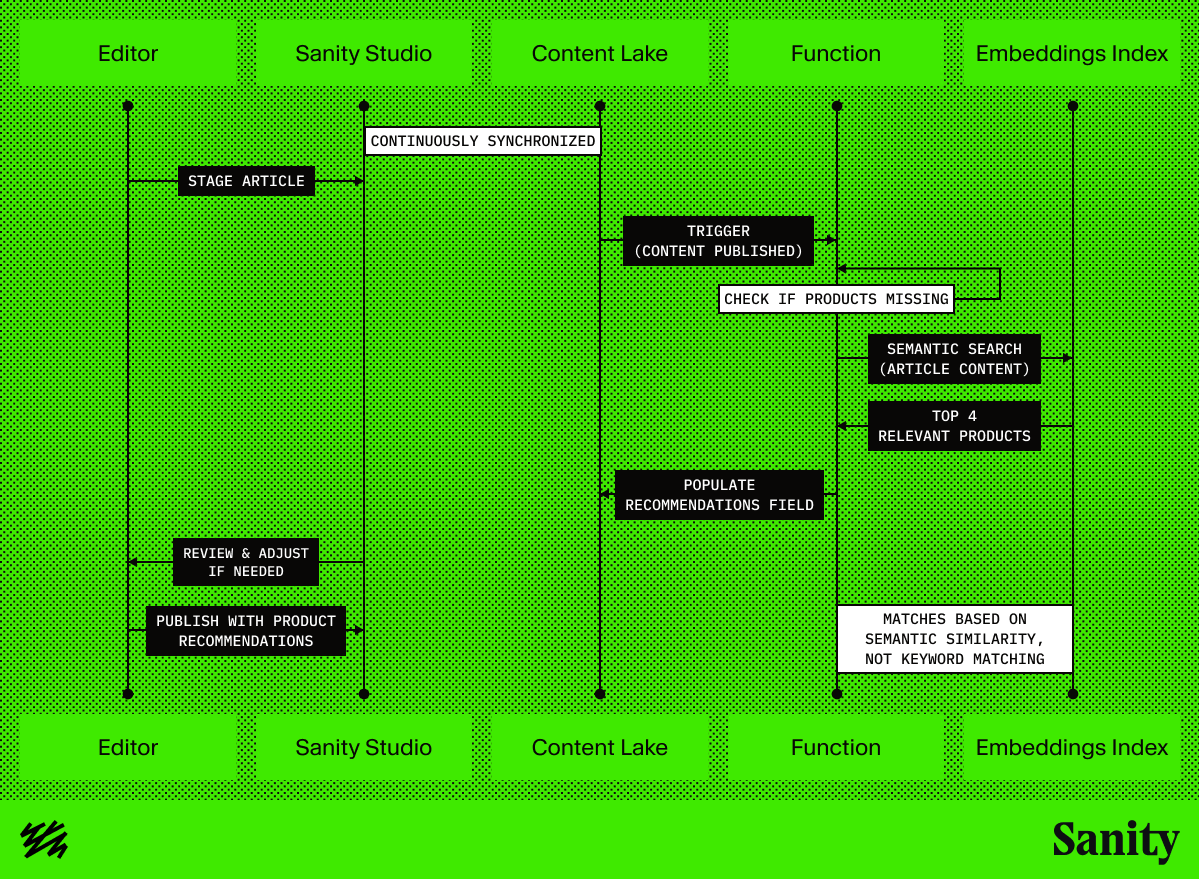

For contextual product recommendations, this means implementing a function that triggers when an article is published. If your system doesn't have functions built in, you can achieve this with a webhook trigger and API calls too.

In this example, the function checks if product recommendations are missing, queries your embeddings index for semantically similar products, selects the four most contextually relevant items, and automatically populates the recommendations field. Editors review the suggestions and adjust if needed before final publish.

Here's how the automation workflow looks:

The implementation above uses Sanity Functions with Embeddings Index. An embeddings index is a kind of database that stores content as semantic vectors, which is what AI uses under the hood. This comes as part of the Sanity platform. In other systems you might have to store the embeddings elsewhere (pgvector, Pinecone, etc.). If you use Sanity, you can also use Agent Actions to generate the semantic search query based on the article.
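To make "semantically similar" concrete, here is a platform-neutral sketch of what a vector lookup does: rank products by cosine similarity between an article vector and product vectors. It is an in-memory illustration, not how Sanity's Embeddings Index, pgvector, or Pinecone are implemented, and `EmbeddedProduct`, `cosineSimilarity`, and `topMatches` are hypothetical names. In practice the vectors come from an embedding model and the search runs inside the index.

```typescript
interface EmbeddedProduct {
  productId: string
  vector: number[]
}

// Cosine similarity: 1 means same direction, 0 means unrelated.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error('vectors must have the same length')
  let dot = 0
  let normA = 0
  let normB = 0
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0
    const y = b[i] ?? 0
    dot += x * y
    normA += x * x
    normB += y * y
  }
  if (normA === 0 || normB === 0) return 0
  return dot / (Math.sqrt(normA) * Math.sqrt(normB))
}

// Return the `limit` products whose vectors are closest to the article vector.
function topMatches(
  articleVector: number[],
  products: EmbeddedProduct[],
  limit = 4,
): {productId: string; score: number}[] {
  return products
    .map((product) => ({
      productId: product.productId,
      score: cosineSimilarity(articleVector, product.vector),
    }))
    .sort((left, right) => right.score - left.score)
    .slice(0, limit)
}
```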

This minimum system should ideally take about 5 days to build and deploy:

- 2 days building and testing locally

- 1 day integration testing with real content

- 2 days deployment and monitoring.

You're not building comprehensive UI or optimizing for scale. You're building the simplest thing that works.

The complete auto-tag function recipe, including code and deployment instructions, is available at Sanity's function recipe exchange. The implementation follows this pattern: trigger on content publication, analyze with AI, patch document with results, log for auditing.
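The pattern described above (trigger, analyze, patch, log) can be sketched independently of any platform. This is not the published recipe: `onArticlePublished`, `WorkflowDeps`, and the injected functions are hypothetical, and on a real platform they would be backed by your function runtime, your embeddings search, and your content API.

```typescript
interface PublishedArticle {
  _id: string
  body: string
  recommendations?: string[]
}

interface ProductMatch {
  productId: string
  score: number
}

// Hypothetical dependencies, injected so the workflow stays testable.
interface WorkflowDeps {
  findSimilarProducts: (text: string, limit: number) => Promise<ProductMatch[]>
  patchRecommendations: (articleId: string, productIds: string[]) => Promise<void>
  audit: (entry: {articleId: string; action: 'skipped' | 'patched'; productIds: string[]}) => void
}

// Runs when an article is published: fill in recommendations only if none exist.
async function onArticlePublished(
  article: PublishedArticle,
  deps: WorkflowDeps,
  limit = 4,
): Promise<string[]> {
  const existing = article.recommendations ?? []
  if (existing.length > 0) {
    // Editors' own picks are never overwritten.
    deps.audit({articleId: article._id, action: 'skipped', productIds: existing})
    return existing
  }
  const matches = await deps.findSimilarProducts(article.body, limit)
  const productIds = [...matches]
    .sort((left, right) => right.score - left.score)
    .slice(0, limit)
    .map((match) => match.productId)
  if (productIds.length > 0) await deps.patchRecommendations(article._id, productIds)
  deps.audit({
    articleId: article._id,
    action: productIds.length > 0 ? 'patched' : 'skipped',
    productIds,
  })
  return productIds
}
```

Keeping the audit call in the same function means every automated change leaves a trace that editors can review during week four.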

Week 4: Measure and iterate

Deploy to production with careful monitoring. For the first week, review every AI-generated recommendation. Track: quality scores, time savings, team adoption rate, edge cases where AI struggles.

After one week, you'll see patterns. AI might consistently miss certain content types, over-recommend specific product categories, or suggest products that are technically relevant but don't match your editorial voice. Adjust the instructions to the AI, add examples to improve accuracy, refine validation rules.
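A lightweight review log is usually enough to surface these patterns. The sketch below assumes editors record, per article, how many AI suggestions were offered, how many they kept, and how long the review took; `ReviewRecord` and `summarizeReviews` are hypothetical names.

```typescript
interface ReviewRecord {
  articleId: string
  suggested: number // AI suggestions offered for the article
  accepted: number // suggestions the editor kept
  reviewSeconds: number
}

// Aggregate a week of review records into the numbers worth tracking.
function summarizeReviews(records: ReviewRecord[]): {
  acceptanceRate: number
  averageReviewSeconds: number
} {
  let suggested = 0
  let accepted = 0
  let seconds = 0
  for (const record of records) {
    suggested += record.suggested
    accepted += record.accepted
    seconds += record.reviewSeconds
  }
  return {
    acceptanceRate: suggested === 0 ? 0 : accepted / suggested,
    averageReviewSeconds: records.length === 0 ? 0 : seconds / records.length,
  }
}
```

A falling acceptance rate for one content type is a hint that the instructions or examples need adjusting for that type.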

By week four, you can measure actual impact:

| Metric | Before AI | After AI | Improvement |
|---|---|---|---|
| Time per article | 5 minutes | 30 seconds | 90% faster |
| Articles per month | 1,000 | 1,000 | Same volume |
| Monthly hours | 83 hours | 8.3 hours | 74.7 hours saved |
| Consistency | 75% | 90% | +15 percentage points |
| Monthly cost | 83 hours × $75 = $6,225 | 8.3 hours × $75 + $75 AI = $698 | $5,527 saved/month |

That's 74.7 hours monthly freed up. 896 hours annually. 0.43 FTE of capacity created without hiring. That's 896 hours for strategic content initiatives instead of manual product linking. Plus measurable revenue impact from better product recommendations reaching 400 more articles monthly.

That's measurable, defendable, repeatable value.
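The capacity figures above follow from a simple conversion. The sketch below reproduces them under one assumption that the guide does not state: a full-time year of 2,080 working hours (40 hours × 52 weeks). `capacityCreated` is a hypothetical helper.

```typescript
// Assumption: one FTE is 2,080 working hours per year (40 hours × 52 weeks).
const ASSUMED_WORK_HOURS_PER_YEAR = 2080

// Convert monthly hours saved into annual hours and a fraction of an FTE.
function capacityCreated(
  hoursSavedPerMonth: number,
  workHoursPerYear: number = ASSUMED_WORK_HOURS_PER_YEAR,
): {annualHours: number; fte: number} {
  const annualHours = hoursSavedPerMonth * 12
  return {annualHours, fte: annualHours / workHoursPerYear}
}

const pilotCapacity = capacityCreated(74.7)
// About 896 hours per year, or roughly 0.43 FTE under the 2,080-hour assumption.
```

If your organization counts a working year differently, pass your own figure as the second argument and the FTE number will shift accordingly.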

Building your operations foundation

If you succeeded in 30 days, you've proven something important: your team can eliminate expensive chores systematically. You have working code, measured impact, and organizational momentum.

The next chore to eliminate depends on your audit from week one. Common high-value targets after product recommendations: metadata tagging, style guide validation, content gap analysis, translation workflows, alt text generation, cross-referencing automation.

Each eliminated chore builds your operations foundation. Sean Grove from OpenAI framed the future clearly: "All of us should have dozens of agents in our pocket running, working for us right now. When machines can build anything, articulating what's worth building becomes your competitive advantage."

This is the "Act 2" transformation that Sanity's co-founders Magnus Hillestad and Simen Svale Skogsrud described at Everything *[NYC].

The two acts of AI adoption

Act 1: Make old things cheaper

Using AI to do current work faster.

Act 2: Rewire how you operate

Enabling capabilities that weren't possible before.

You don't start with Act 2. You build toward it by eliminating friction systematically until the accumulated improvements enable new capabilities. loveholidays demonstrates this progression. They started using AI to generate descriptions faster (Act 1), which let them cover 30x more properties (scale), which enabled market expansion that wasn't previously feasible (Act 2). Same content strategy throughout. Systematically better operations.

The companies building for Act 2 aren't the ones with the boldest AI strategies. They're the ones eliminating the most friction, fastest. They understand that when AI can execute anything, clear specifications and structured content become the scarce resources.

Your 90-day path forward: Month 1 eliminated one chore (product recommendations) and saved 75 hours monthly. Month 2 eliminates a second chore (metadata tagging) and saves an additional 40 hours. Month 3 connects these chores into an automated workflow. By month 3, you're saving 115 hours monthly, you've built operational momentum, and you've created 0.66 FTE of capacity without hiring.

That's not AI theater. That's operational improvement that compounds into competitive advantage.

Ready to implement?

Book a demo to see how Sanity's AI infrastructure makes this possible, or join the Content Agent beta to get early access to schema-aware AI capabilities. Share your implementation story in our community Slack and help us build the patterns that make AI actually work for content operations.

Resources

Conference Videos: Watch all talks from Everything *[NYC], including David's 8-minute demo, the workshop showcase, and the automation panel.

Function Recipes: Complete implementation examples including auto-tag, brand-voice-validator, and more at sanity.io/exchange

Community: Join the #content-agent channel in Sanity's community Slack at slack.sanity.io for implementation help and to share what you build

Content Agent: Get early access to schema-aware AI capabilities at sanity.io/blog/content-agent-free-preview

Agent Actions & Functions: Learn about the building blocks at sanity.io/blog/agent-actions and sanity.io/blog/compute